Fansubbing Guide

This project aims to become a public guide for aspiring as well as veteran fansubbers that everyone can contribute to. It is currently under construction as many topics are still missing, but will hopefully cover all important areas and roles that go into the fansubbing process. (Please help by improving the pages and filling in TODO entries. More details are provided on the next page.)

To start reading, you can click on the arrow on the right or press your → key to go to the next page, or you can browse the page index on the left.

The project is hosted on Github and available to read online at https://guide.encode.moe/.

Contribution Guidelines

If you are interested in supporting or contributing to this guide, please keep on reading.

If you are not, feel free to skip to the next page.

We are currently still in the early phases of this guide, so any form of contribution, including just giving feedback, is greatly appreciated. Please open an issue on our Github repository with your feedback, or begin working on a Pull Request.

If you are mainly looking for things to work on, refer to the TODO section.

General

Language

The language of this guide is English. American or British English are both acceptable and there is no preference for either.

The only exceptions are pages specific to a particular language, for example with references to online dictionaries or official grammar rule books, or other typographic advices, for example concerning the usage of quotation marks.

When adding such a page, please briefly describe in your Pull Request what the text is about, what topics it covers, and, if necessary, why it only applies to a specific language.

Technology

This guide is written in Markdown and uses Rust’s mdBook to compile the static HTML pages.

In order to build and preview the guide locally, you only need to install mdBook, which can be done via the provided binaries or directly installing via Crates.io, Rust’s package registry:

$ cargo install mdbook

Updating crates.io index

Installing mdbook v0.4.1

Downloaded syn v1.0.38

...

Downloaded 4 crates (501.9 KB) in 0.42s

Compiling libc v0.2.74

...

Compiling mdbook v0.4.1

Finished release [optimized] target(s) in 2m 56s

Once an mdbook executable is installed,

running mdbook serve in the root directory of the guide’s repository

and opening http://localhost:3000 with your browser

will show a preview of the book.

Any changes you make to the source .md files

will cause your browser to be refreshed and automatically reloaded.

$ mdbook serve

[INFO] (mdbook::book): Book building has started

[INFO] (mdbook::book): Running the html backend

[INFO] (mdbook::cmd::serve): Serving on: http://localhost:3000

[INFO] (warp::server): Server::run; addr=V6([::1]:3000)

[INFO] (warp::server): listening on http://[::1]:3000

[INFO] (mdbook::cmd::watch): Listening for changes...

Changes to the theme can be done by editing the .css files in /theme/css/.

For information on adding plug-ins or changing the way the book is built,

see the mdBook User Guide.

Adding a New Page

In order for your page to be accessible,

you need to add it to the SUMMARY.md file.

The title used there will be used in the navigation bar,

so keep it short.

TODO

Various sections are still under construction.

You will occasionally find TODO as verbatim text

or within comments.

Our goal is to have a section with one or more pages for each of the roles specified in the roles page.

Feel free to work on any of the TODO marks

or create a new section.

Currently, we aim to add the following topics in no particular priority:

- Workflow

- Translation

- Edit

- Timing

- Basic Procedure

- Snapping

- Joining, Splitting

- Post-processing (TPP & Useful Scripts)

- Shifting & Sushi

- Karaoke

- Typesetting

- …with Aegisub

- Styling (of dialogue)

- Signs

- Positioning, Layers, Rotation, Perspective, …

- Masking

- Automation Scripts

- Movement & Motion Tracking

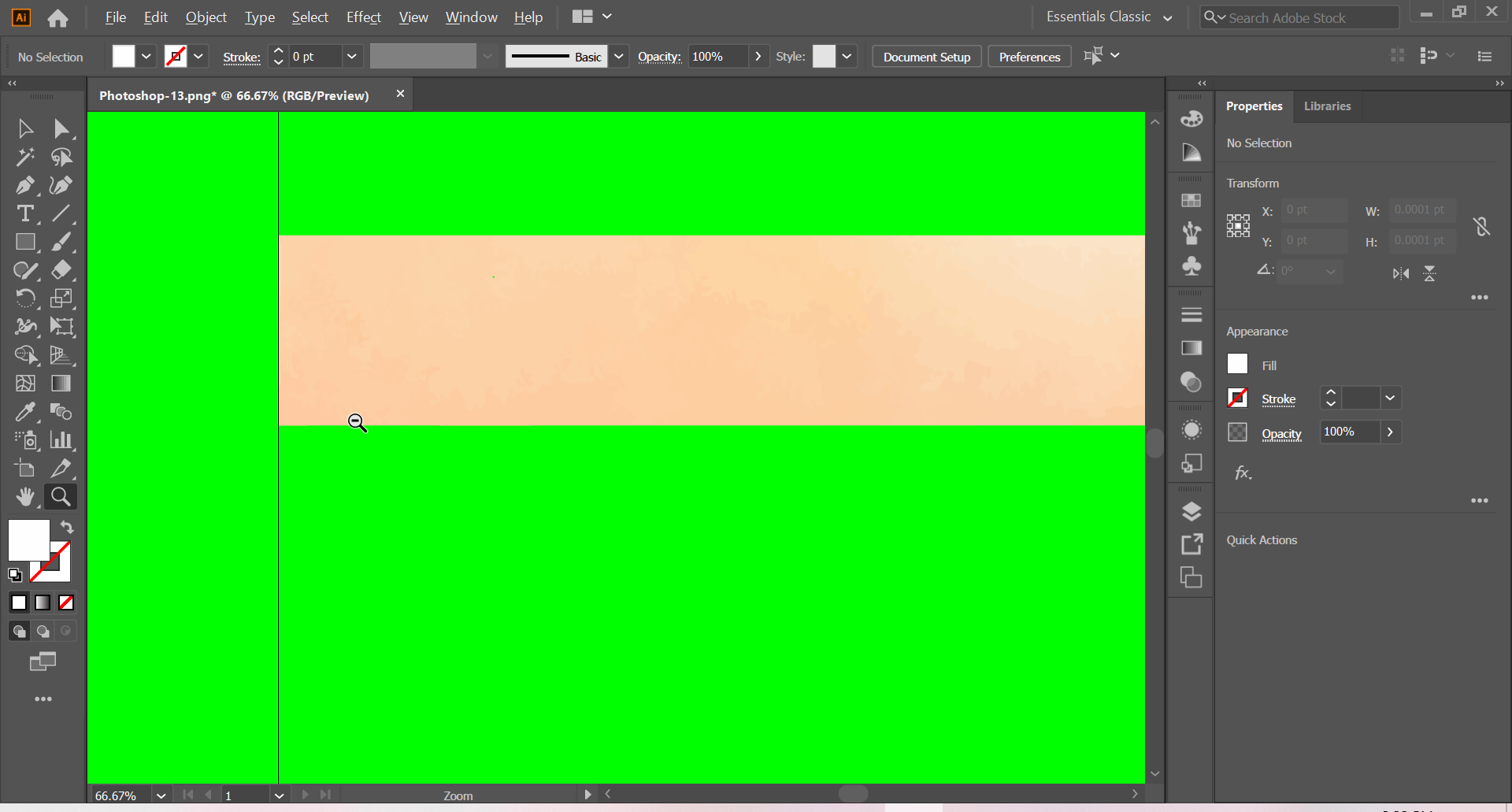

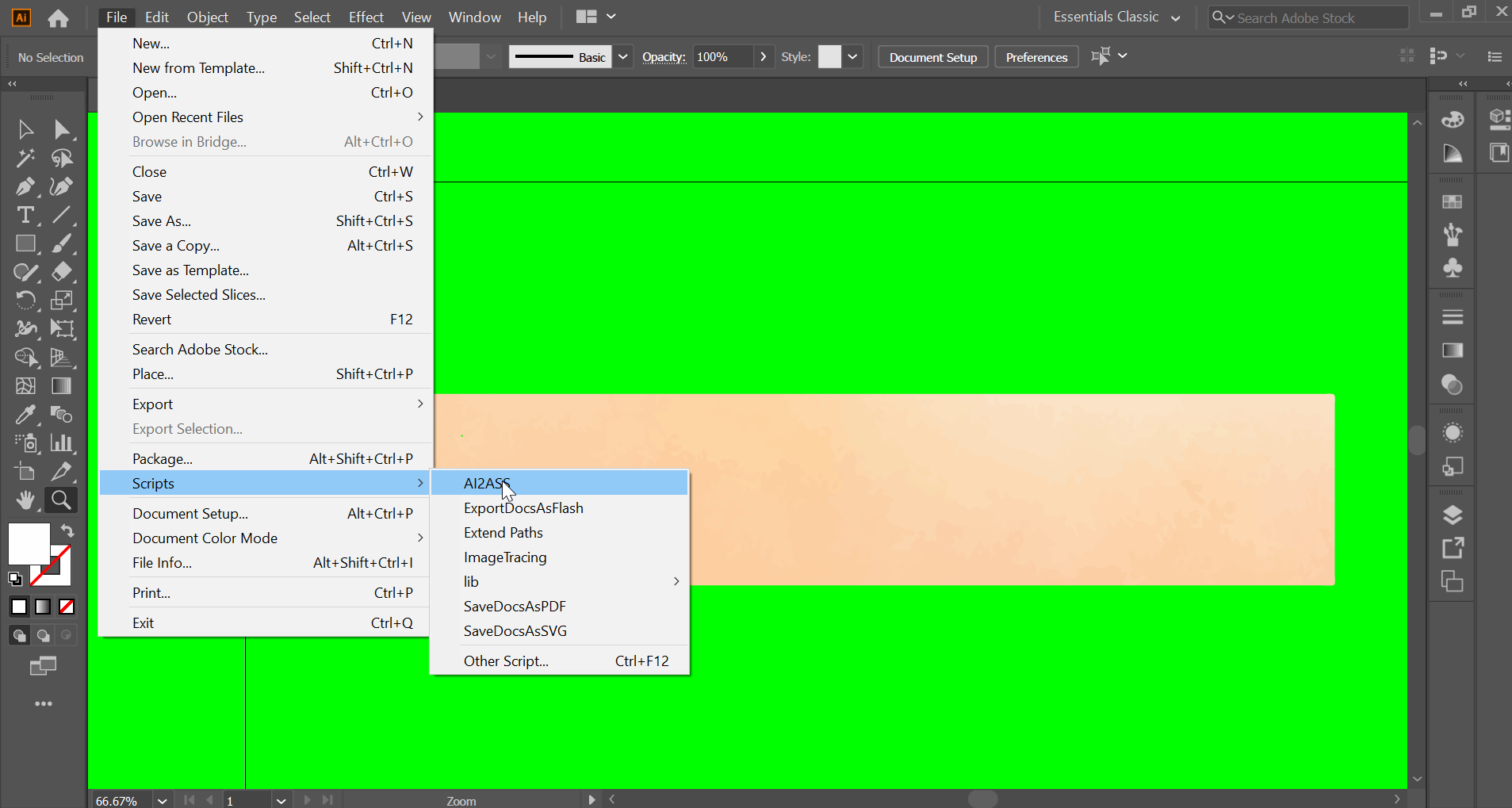

- …with Adobe Illustrator

- (…with Adobe After Effects)

- …with Aegisub

- Encoding [I’m sure there’s something to be done]

- Quality Check

- Karaoke Effects

There is a collection of links here that can be used as reference when working on any future section.

Style Guidelines

The following are the style guidelines for various aspects of this guide. The most important aspect is having Semantic Linefeeds. The other points may serve as guidelines for formatting future pages. Refer to the Markdown Guide for guidelines on visual formatting.

Semantic Linefeeds (!)

Always use Semantic Linefeeds when editing text. They are used to break lines into logical units rather than after a certain line length threshold is reached!

They drastically improve sentence parsing in the human brain and make code diffing much more simple compared to hard-wrapping at 80 columns. You should still aim not to exceed 80 columns in a single line, but unless you are writing code or URLs, you will most likely not have any problems with this. Markdown will collapse adjacent lines into a paragraph, so you don’t have to worry about the rendered result.

As a rule of thumb, always start a new line on a comma, a period, any other sentence terminating punctuation, parenthesized sentences (not words), or new items in a long list (such as the one you are reading right now).

Indentation

The indent size is two spaces.

Lists

Unordered list lines should be indented once,

while ordered lists are indented twice.

The text of an unordered item should have one space after the -,

while the text of an ordered item

should start on the fourth column

(start every line with the number 1).

- This is an unordered list

- With a sublist

- And another item in that sublist

1. This is an ordered list

1. Another list item

…

1. Last entry of the list

Blank Lines

All block lists should be separated from text with a blank line on each side. The same applies to code blocks.

Separate headings from text with two blank lines before the heading, and one after. Headings immediately following their parent heading only need one blank line in-between.

Additionally, separate text from end-of-section hyperlink lists with one blank line before the list. For image embeds, there should be a blank line on each side.

Horizontal rules can be useful for splitting subsections or

as a visual guide to where the next explanation begins.

They are created with a sole --- on its own line,

and must have a blank line on each side.

Hyperlinking

There are three types of hyperlinks.

- The text you want highlighted is more than one word,

or different than the shorthand name of the link.

[website's great article][short]- website’s great article

- The text you want highlighted is the same as the shorthand.

[short][]- short

- You want the full address displayed.

<https://guide.encode.moe/>- https://guide.encode.moe/

For the first two hyperlinking styles, you will want to include a line at the end of that header section in the following format.

[short]: https://guide.encode.moe/

If there are multiple links used in the first two styles, you will want multiple lines at the end of the header section.

[short1]: https://guide.encode.moe/

[short2]: https://guide.encode.moe/CONTRIBUTING.HTML

…

For relative links (links to other pages, images, or files within this repository), follow the guidelines for Jekyll Relative Links.

Section Linking

If you are linking to a section on the same page,

[section name](#header) is allowed in-line.

An example of this is the hyperlink section you are reading.

In markdown, this is simply [the hyperlink section you are reading](#hyperlinking).

Section names are converted to all lowercase,

replacing spaces with a - dash,

while disregarding all non-alphanumeric characters

with the exception of the literal - dash being kept.

Therefore, a section named $aFoo-Bar b2 ! can be referenced

as foobar.md#afoo-bar-b2-.

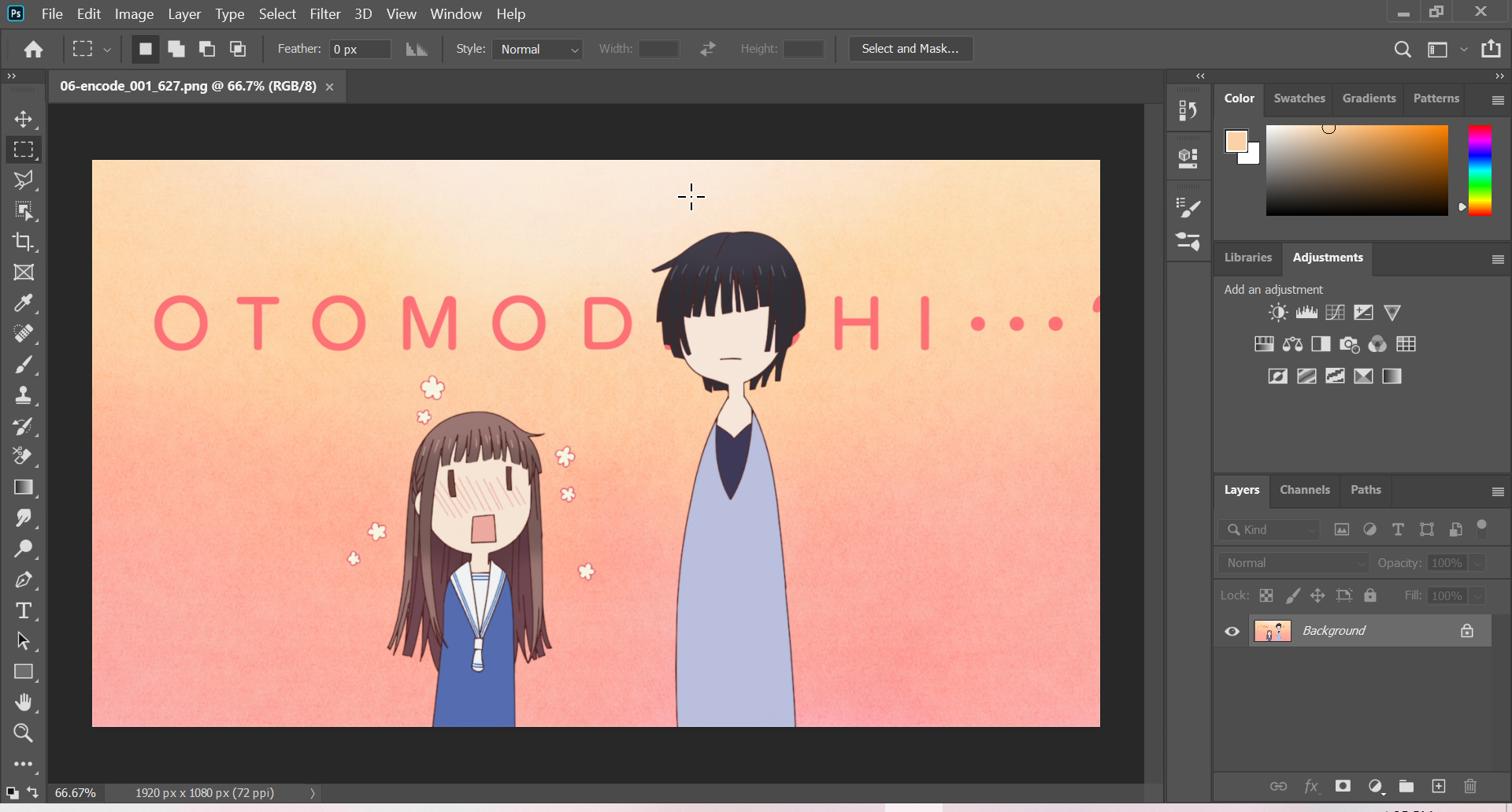

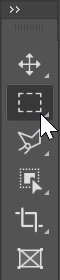

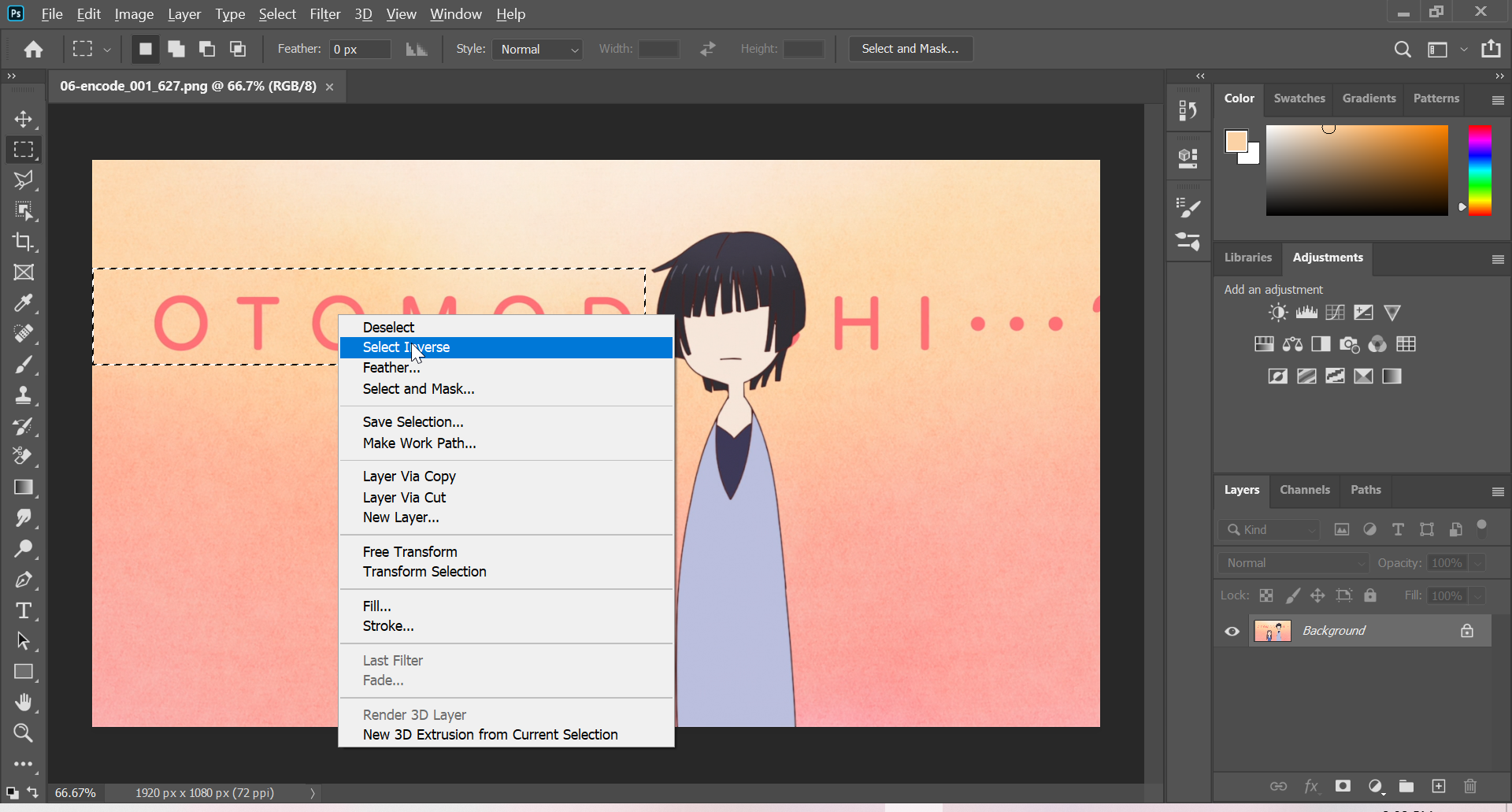

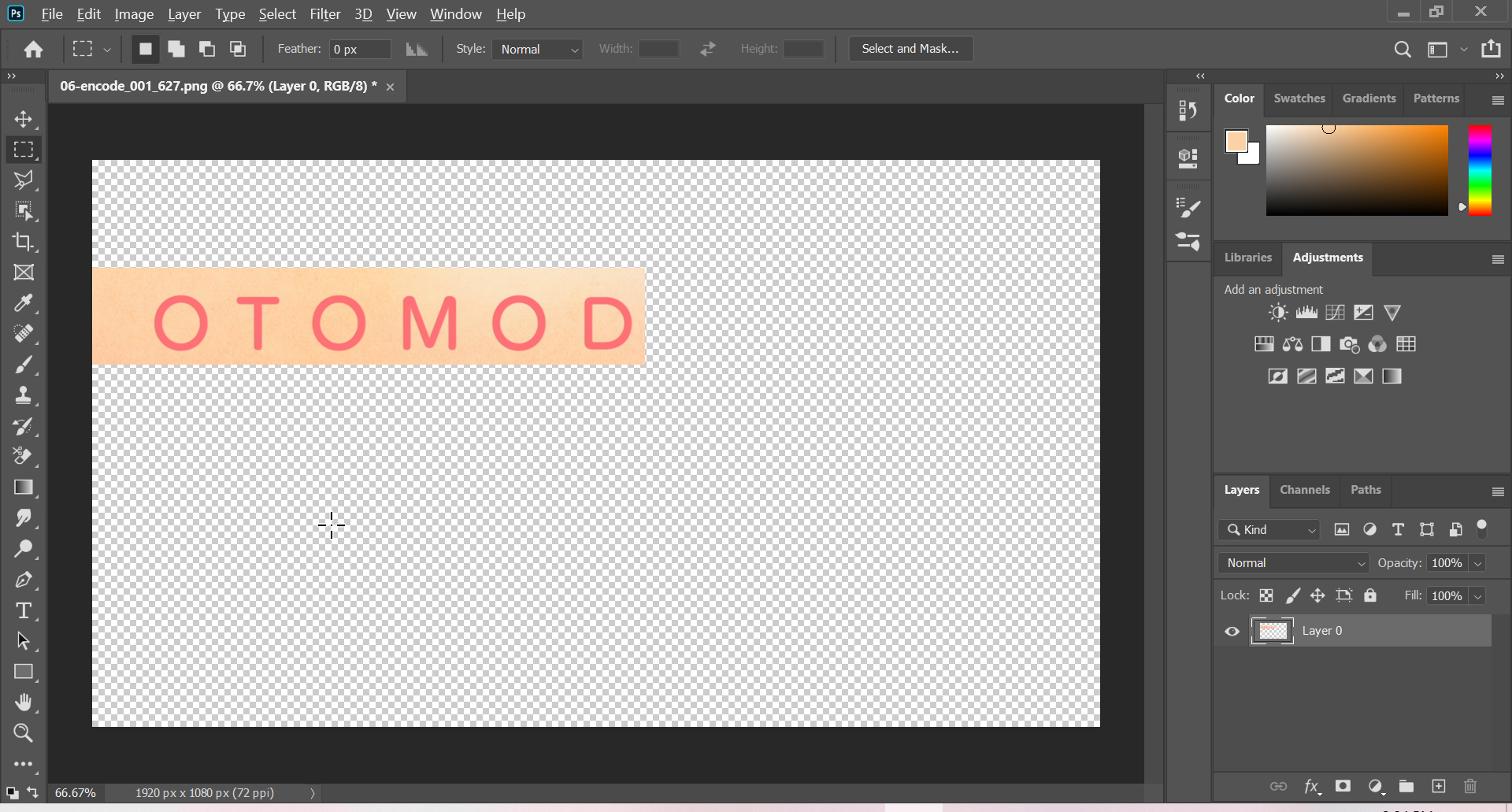

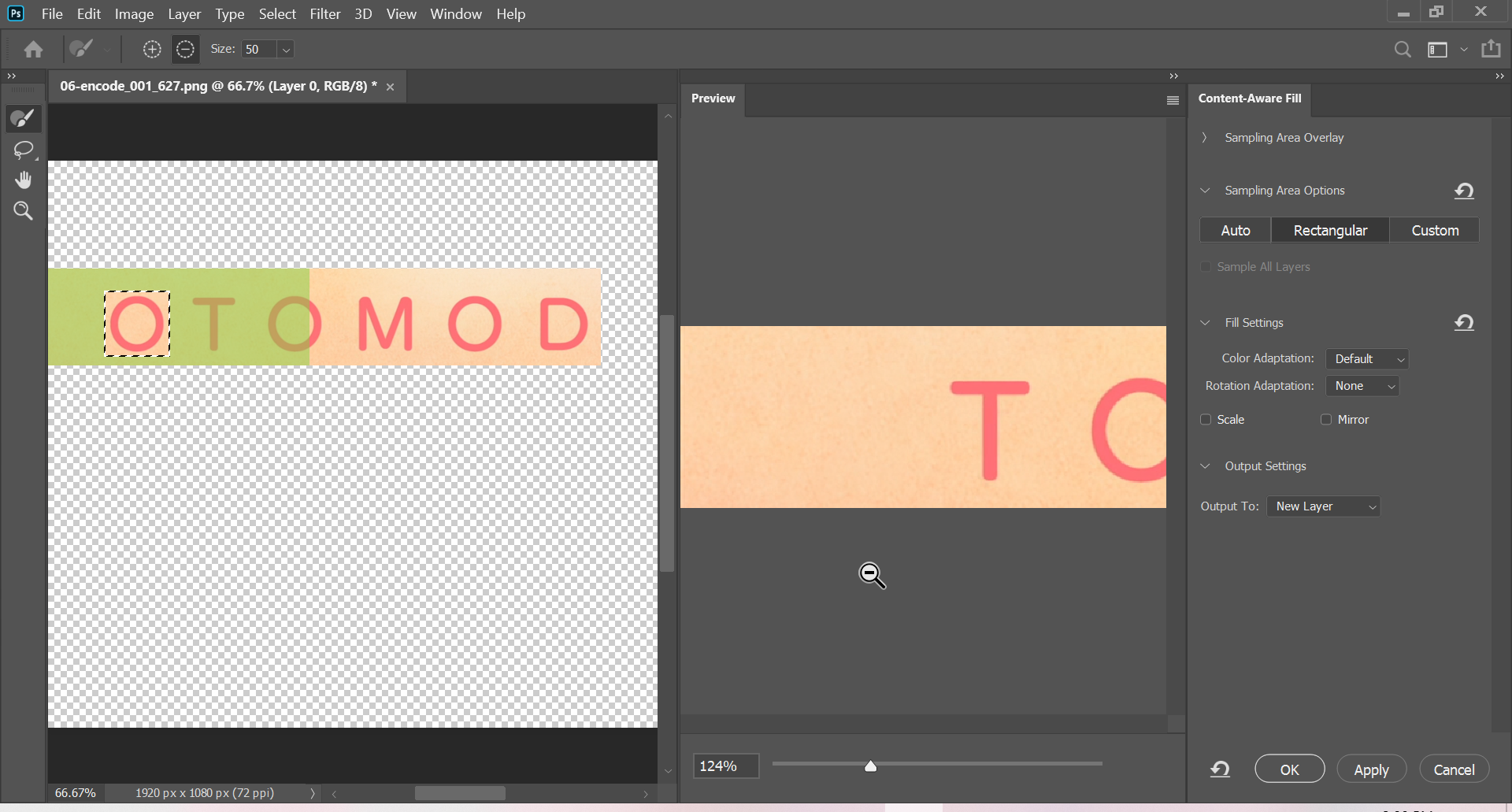

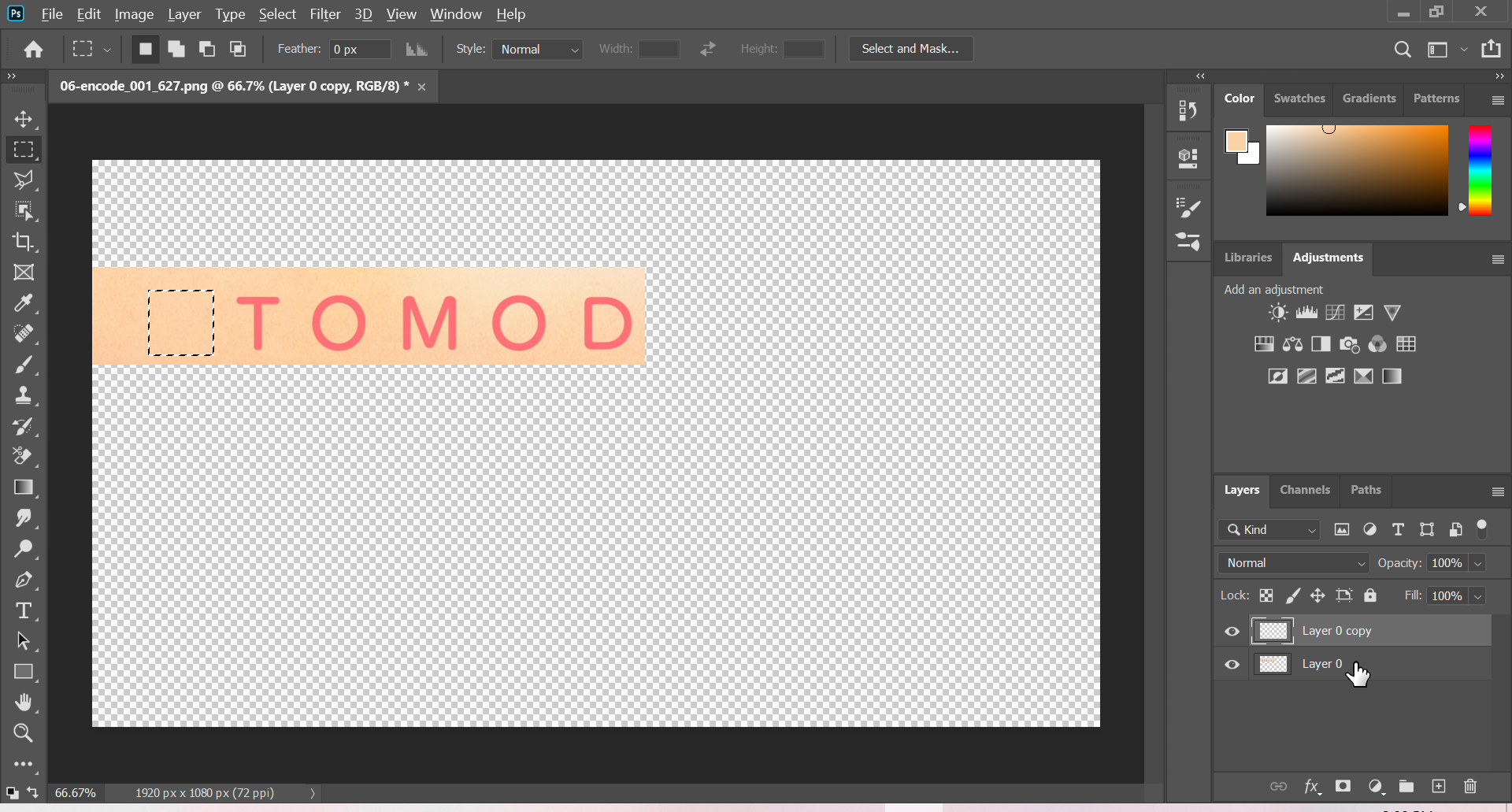

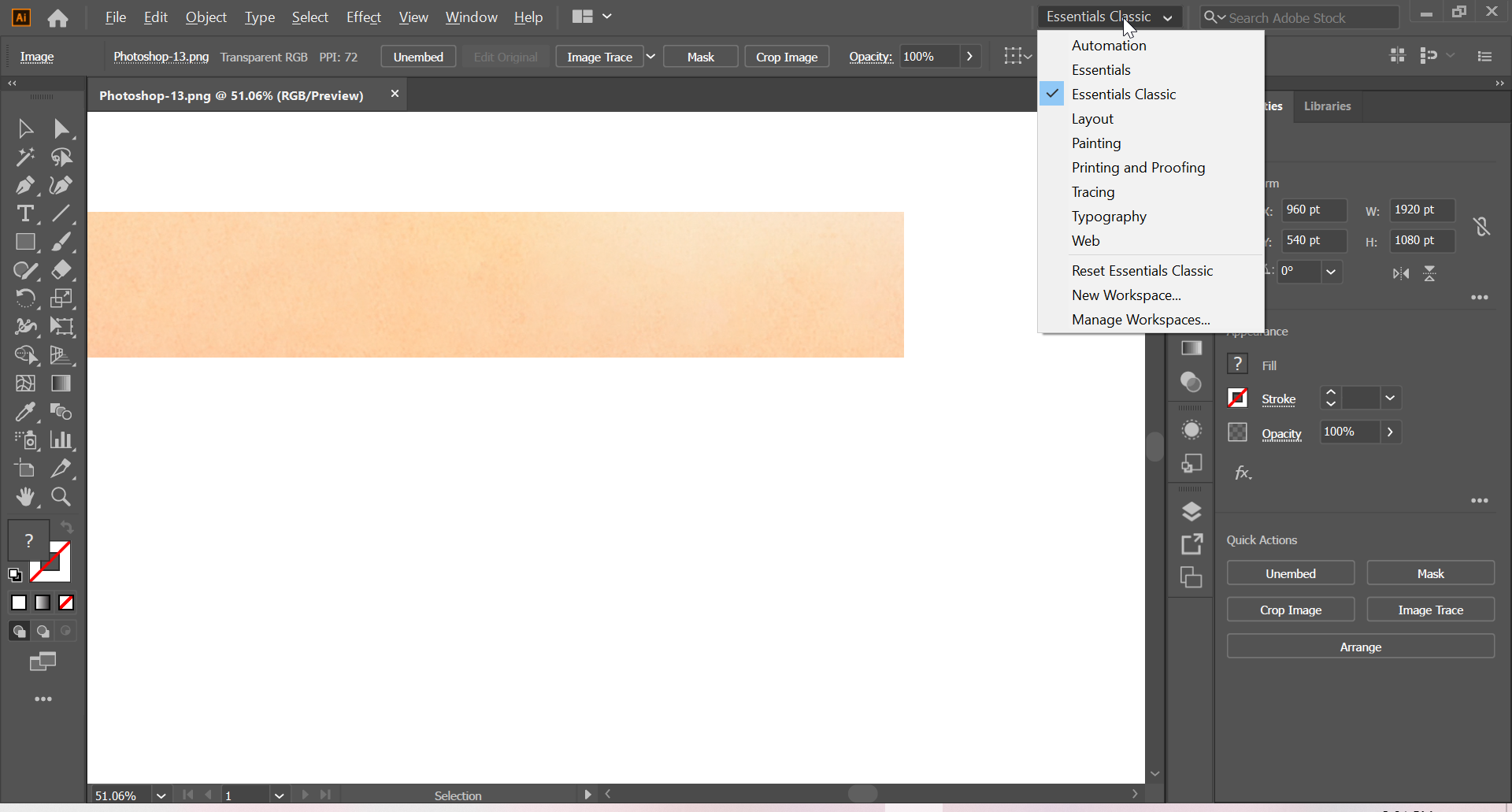

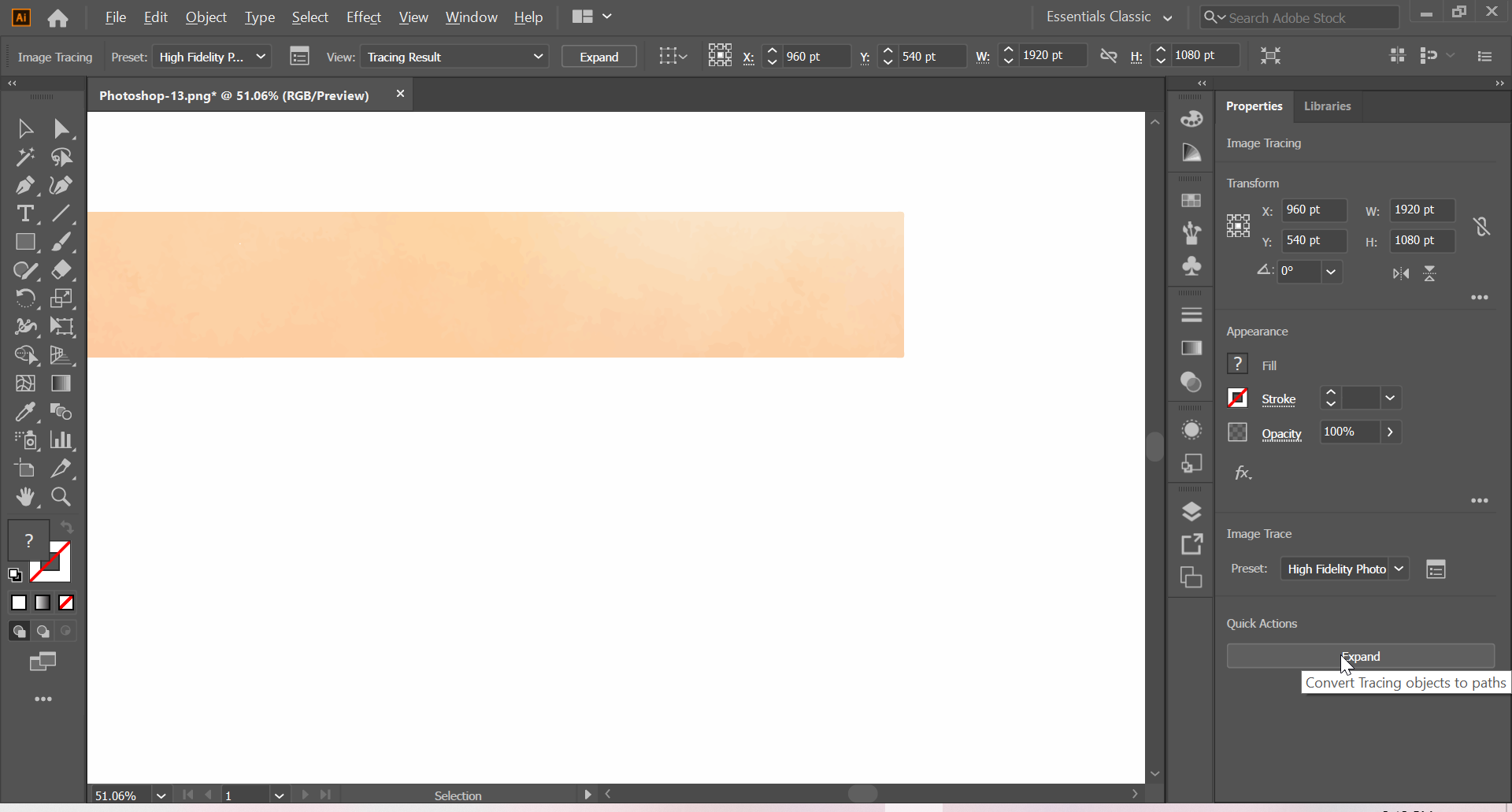

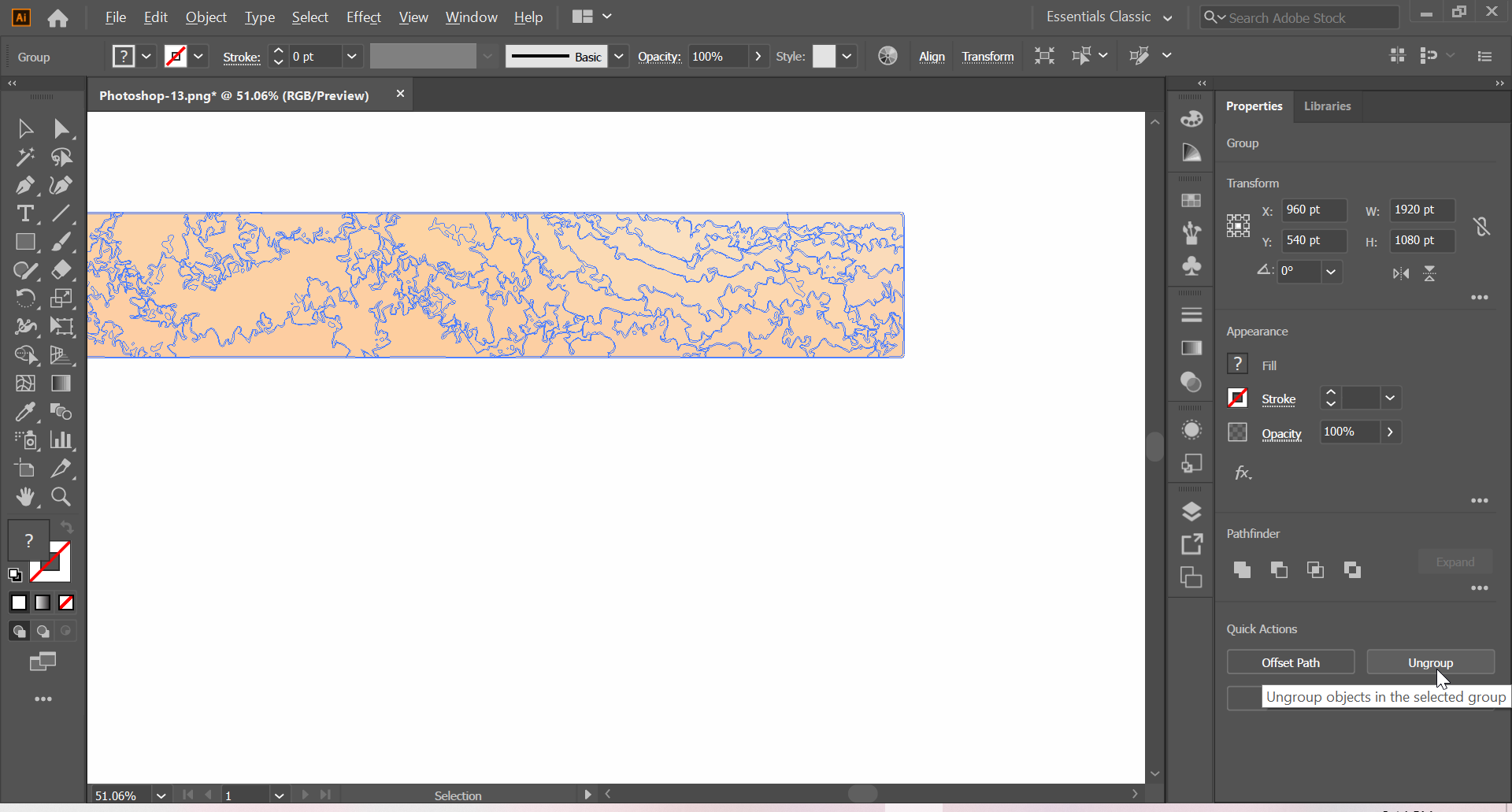

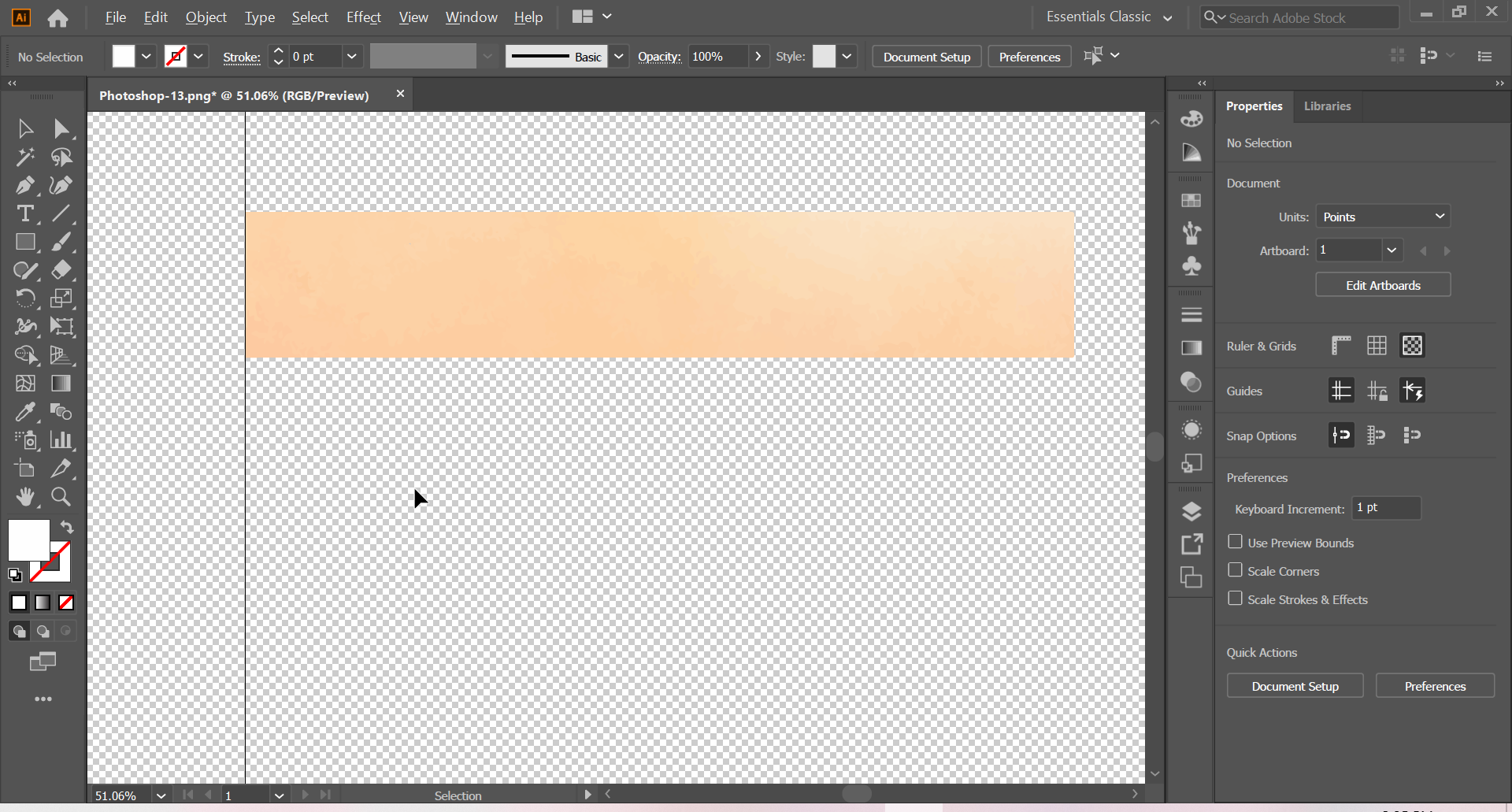

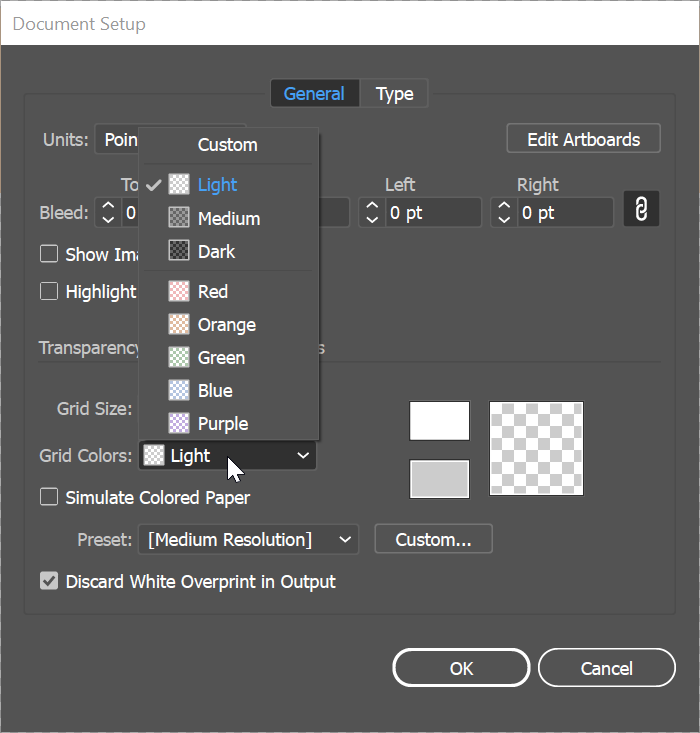

Adding Images

When adding images to your paragraphs, use the following syntax1:

*Visible caption text*

Make sure your image is separated from other images or text with a blank line above and below, as this will align them correctly and allow for the caption to be displayed.

Try to avoid adding lossy images to the guide

(all screenshots should be lossless from the source).

Also, make sure your image is compressed as much as possible

before committing it.

This can be done with pingo’s

lossless PNG compression: pingo -sa file.png.

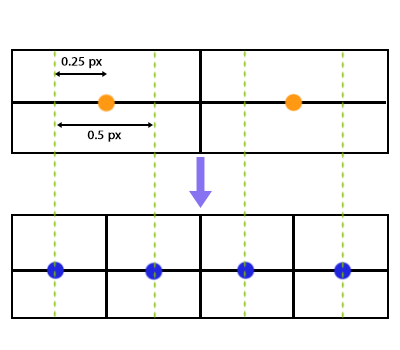

When extracting frames directly from a VapourSynth pipline

where the format might be vs.YUV420P16 (YUV 4:2:0, 16-bit),

convert your image to vs.RGB24 (RGB 8-bit) before saving as a PNG.

This is because many, if not all, browsers don’t support

images with bit-depths higher than 8 bpp,

and the dithering behavior of some browsers may be different from others

or poorly executed.

You can change the format and bit-depth while saving to a PNG file with the following lines:

# replace `{frame}` with the frame number of the clip you are extracting

out = core.imwri.Write(clip[{frame}].resize.Bicubic(format=vs.RGB24, matrix_in_s='709', dither_type='error_diffusion', filter_param_a_uv=0.33, filter_param_b_uv=0.33), 'PNG', '%06d.png', firstnum={frame})

out.get_frame(0)

Citations

If you are archiving another website’s text or copying their images into this repository, make sure to cite your sources using APA formatting. To generate APA citations, use PapersOwl. Only use this if you fear the website is not a permanent source.

For mid-document citations, use “in-text citations” with footnotes for the full citations. For a full document citation, simply place the full citation at the bottom of the document, under a horizontal rule.

Footnotes

Footnotes can be used for information that would interrupt

the flow or purpose of a paragraph,

but may still be of interest.

They are created with [^#] in-text,

and an additional [^#]: Text here... at the bottom of the page,

separated by a horizontal rule ---,

where # is to be replaced with an increasing

and per-page unique number.

Info/Warning boxes

Info boxes can be used similarly to footnotes, but for information that the reader might want to know before continuing to read the rest of the page.

Warning boxes are similar but are for information that is necessary for the reader to know before continuing to read the rest of the page.

The current syntax uses in-line HTML to render these paragraphs with a different CSS style. These paragraphs must be separated with a blank line above and below similar to images or code blocks.

<div class="info box"><div>

Text here as usual, using semantic linefeed rules.

The blank lines around HTML text are needed

to enable inline Markdown parsing,

for example for text formatting.

</div></div>

<div class="warning box"><div>

This class should be used for important information.

</div></div>

Punctuation

Use the ASCII symbols " and '

for quotation and apostrophe respectively

over the Unicode versions “, “, and ’.

They will be converted during the build process

and in most situations,

text editing tools will work better

with the generic ASCII symbols.

Mathematics with MathJax

This guide has MathJax support,

so in-line or block mathematics can be rendered with TeX.

This obviously requires knowledge of TeX syntax and the supported functions

listed in the MathJax documentation.

To start in-line formulas, the syntax is \\( ... \\).

On the other hand, the block formulas’ syntax is:

$$

...

$$

Similar to ``` fenced code blocks, separate these blocks with one blank line on either side.

This differs from normal Markdown image syntax,

by abusing CSS tags to render the Visual caption text

centered and under the image.

This may be changed in the future with a plug-in.

Preface

What does it take to be a fansubber?

While I’d like to say that the most and only important thing is a healthy (or unhealthy) love of anime, that would be a lie. Being a fansubber takes a lot of work—it can be like having a second job, depending on how many projects you pick up. Even though fansubbers provide a free product as volunteers, there are still expectations that you will put in the time and effort to complete your part in a timely manner.

Now, I don’t want to scare you away, but I do want you to be aware of what you’re getting into.

The most successful fansubbers are often those with lots of spare time such as students, physically disabled persons, and single adults without many commitments outside of work. While an unrestricted schedule isn’t a hard requirement, it is something to keep in mind as you start this process.

That said, some roles can be a means of keeping up or honing a skill. The translator, editor, and quality checker roles are particularly suited to this situation as they require skills that are easily applicable to careers outside of fansubbing. However, these roles are also incredibly difficult to teach, especially with the resources available to fansubbers, so if they are addressed in this guide, it will not be in as much depth as other roles.

If you don’t even know what roles there are to choose from, don’t worry—we’ll get there. For now, let’s move on to some practical requirements.

Roles

There are 8 (sometimes 9) major roles in every fansub group. They are:

- Encoder

- Timer

- Typesetter

- Editor

- Quality Checker

- Optional: Translator

- Optional: Translation Checker

- Optional: Karaoke Effects Creator

- Optional: Project Leader

In this guide, we will only be providing in-depth guides for the Encoder, Timer, and Typesetter roles. However, Quality Checkers are often expected to be familiar with most or all of the roles in order to recognize errors.

This page serves as just an overview of the work various roles will be expected to complete.

Encoder

Time commitment per episode: 20 minutes - 2 hours active (4-12 hours inactive)

Encoders (sometimes abbreviated as ENC) are responsible for the audio and video. They will generally be provided with one or more video sources and are expected to produce the best video possible within reason.

This is done with a frame-by-frame video processor such as AviSynth and VapourSynth1, a video encoder such as x264 or x2652, and audio tools such as eac3to, qaac, and FLAC3. This is not a comprehensive list, but it does represent the broad categories of tools required.

Encoders are expected to have a high level of skill and understanding of video concepts and tools. It is perhaps the most technically challenging role in fansubbing. However, much of the work is repeatable, as each episode in a show will usually be very similar to every other one. It will also get easier over time as they become more familiar with the concepts and tools.

One last note about encoding: there are as many opinions about how to fix video problems as there are encoders. Encoders can and often do become contentious about their work, theories, and scripts. It’s important to keep in mind that a disagreement is not always an insult, and more experienced encoders often just want to help and provide feedback. The important part is the result!

Timer

Time commitment per episode: 20 minutes - 4 hours

The Timer (abbreviated TM) is responsible for when the text representing spoken dialogue shows up on screen.

The timing of subtitles is much more important than one might assume. The entrance and exit times of the subtitles, or a fluid transition from one line to the next, can make a large impact on the “watchability” of the episode as a whole. Take, for example, the following clip from Eromanga-sensei:

On the left are the official subtitles from Amazon’s AnimeStrike, and on the right is a fansub release. There are many problems with Amazon’s subtitles: entering and exiting the screen up to two seconds late, presenting 4-5 lines on screen at once, and not separating dialogue based on speaking character. These problems detract from the viewing experience, drawing attention to the appearance of the subtitles and distracting from the story and video.

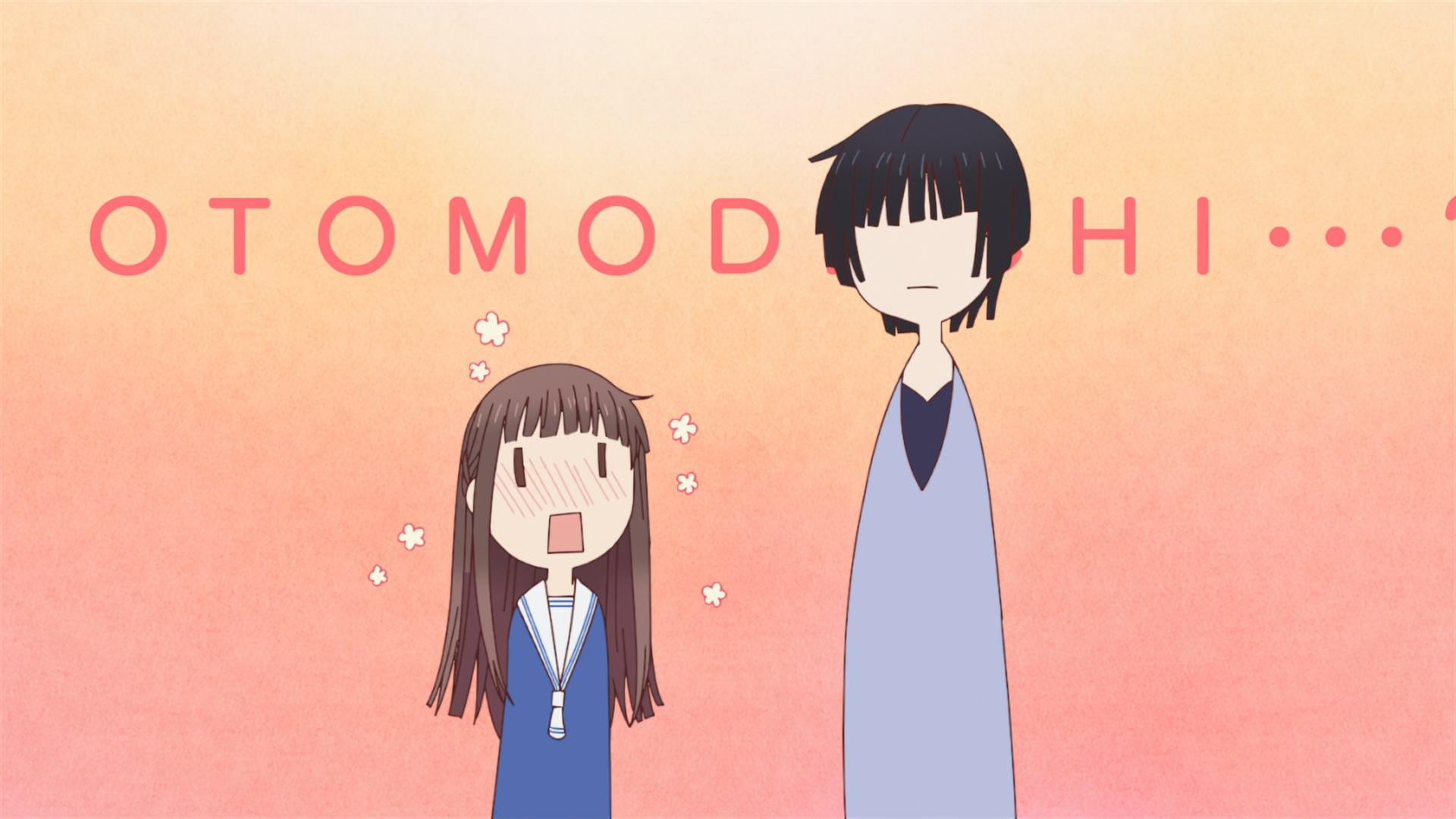

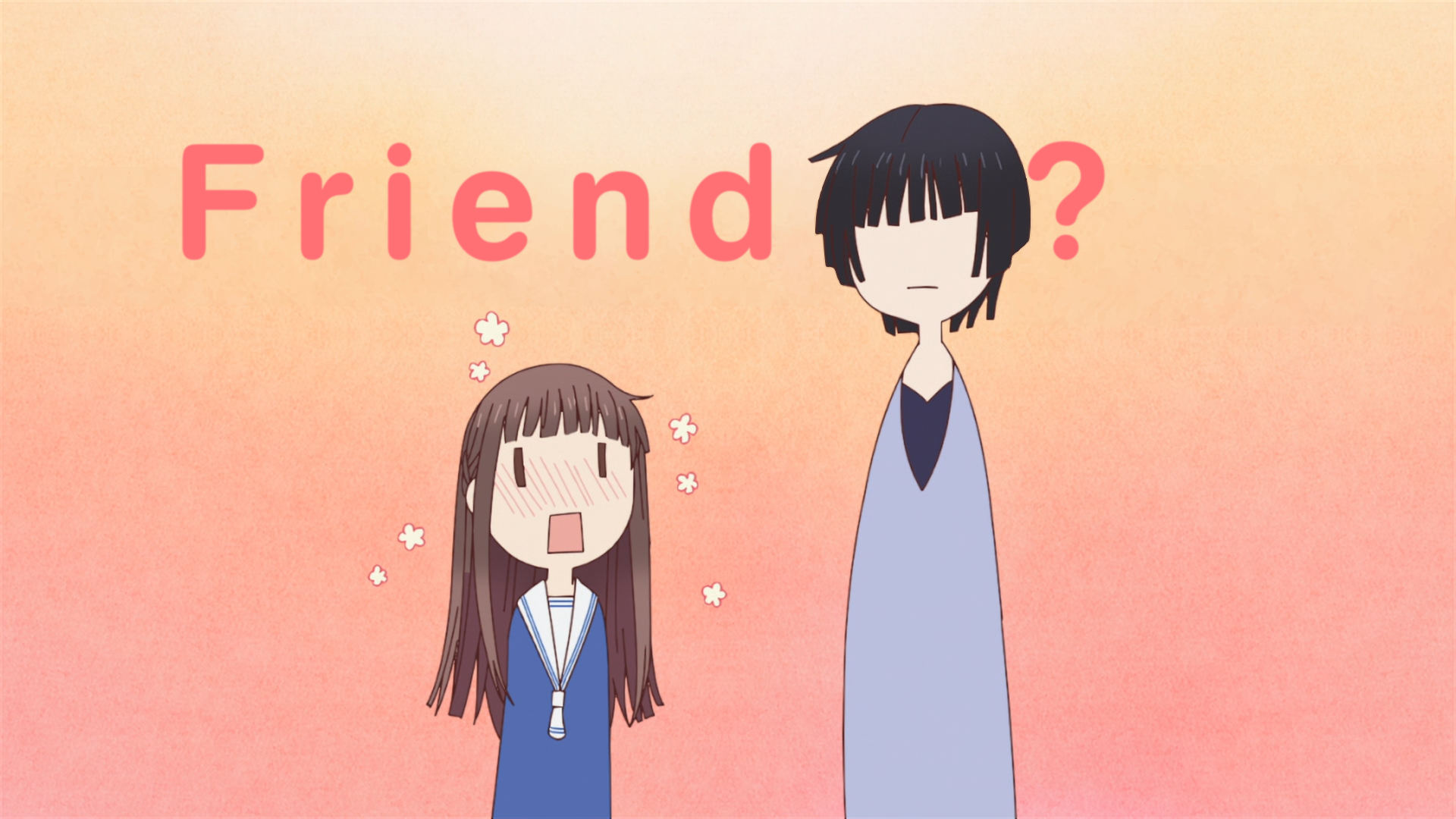

Typesetter

Time commitment per episode: 20 minutes - 8+ hours (dependent on number and difficulty of signs)

Typesetters (abbreviated TS) are responsible for the visual presentation of translated text on-screen. These are generally called signs.

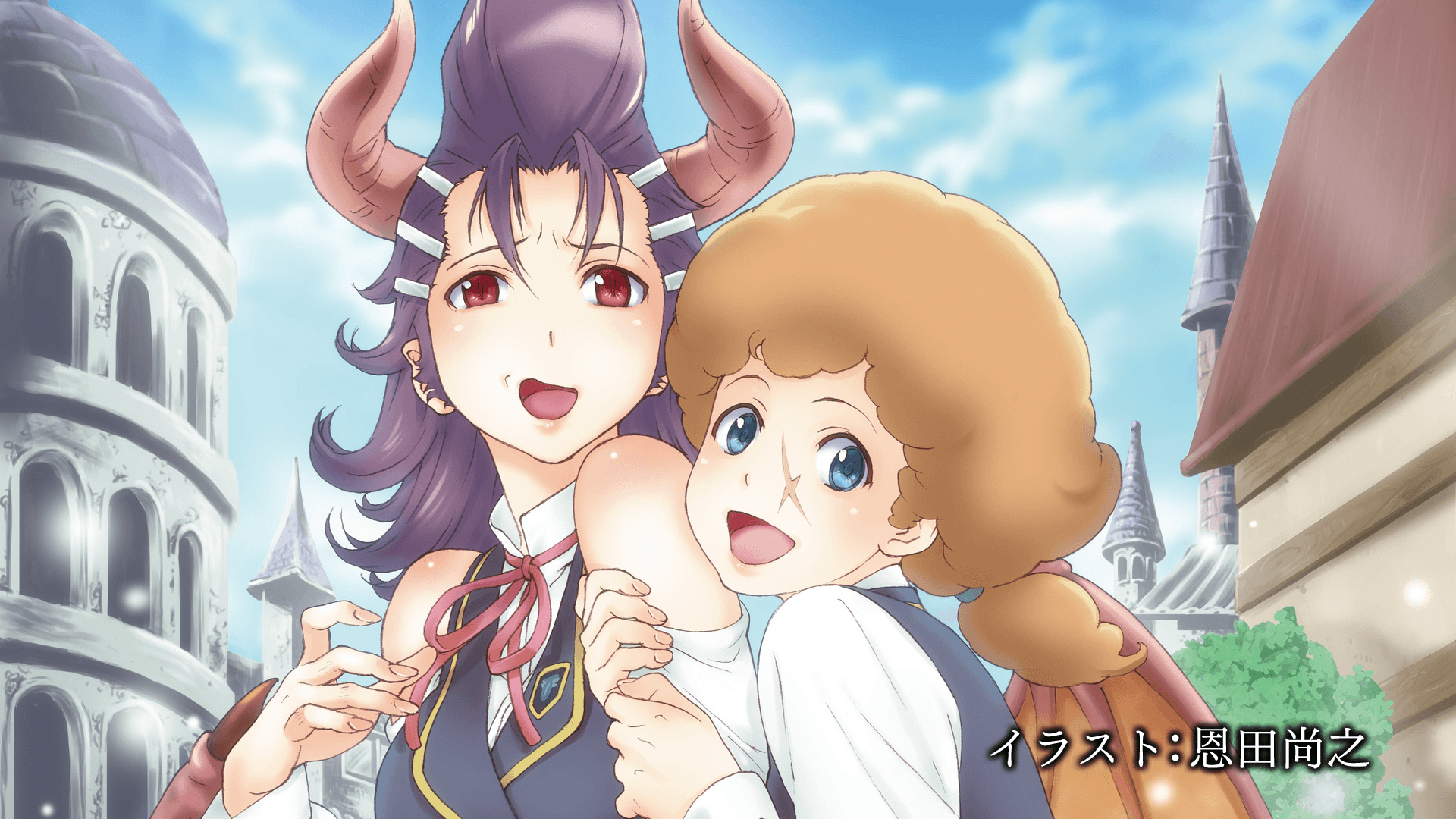

For example, given this scene and a translation of “Adachi Fourth Public High School”…

[DameDesuYo] Eromanga-sensei - 01 (1920x1080 10bit AAC) [05CB518E].mkv_snapshot_03.11_[2017.08.18_21.14.55].jpg

[DameDesuYo] Eromanga-sensei - 01 (1920x1080 10bit AAC) [05CB518E].mkv_snapshot_03.11_[2017.08.18_21.14.55].jpg

the Typesetter would be expected to produce something like this:

[DameDesuYo] Eromanga-sensei - 01 (1920x1080 10bit AAC) [05CB518E].mkv_snapshot_03.11_[2017.08.18_21.14.43].jpg

[DameDesuYo] Eromanga-sensei - 01 (1920x1080 10bit AAC) [05CB518E].mkv_snapshot_03.11_[2017.08.18_21.14.43].jpg

Almost every sign the Typesetter works on will be unique, requiring ingenuity, a wild imagination, a sense of style, and a high degree of attention to detail. The Typesetter’s goal is to produce something that integrates so well into the video that the viewer does not realize that it is actually part of the subtitles.

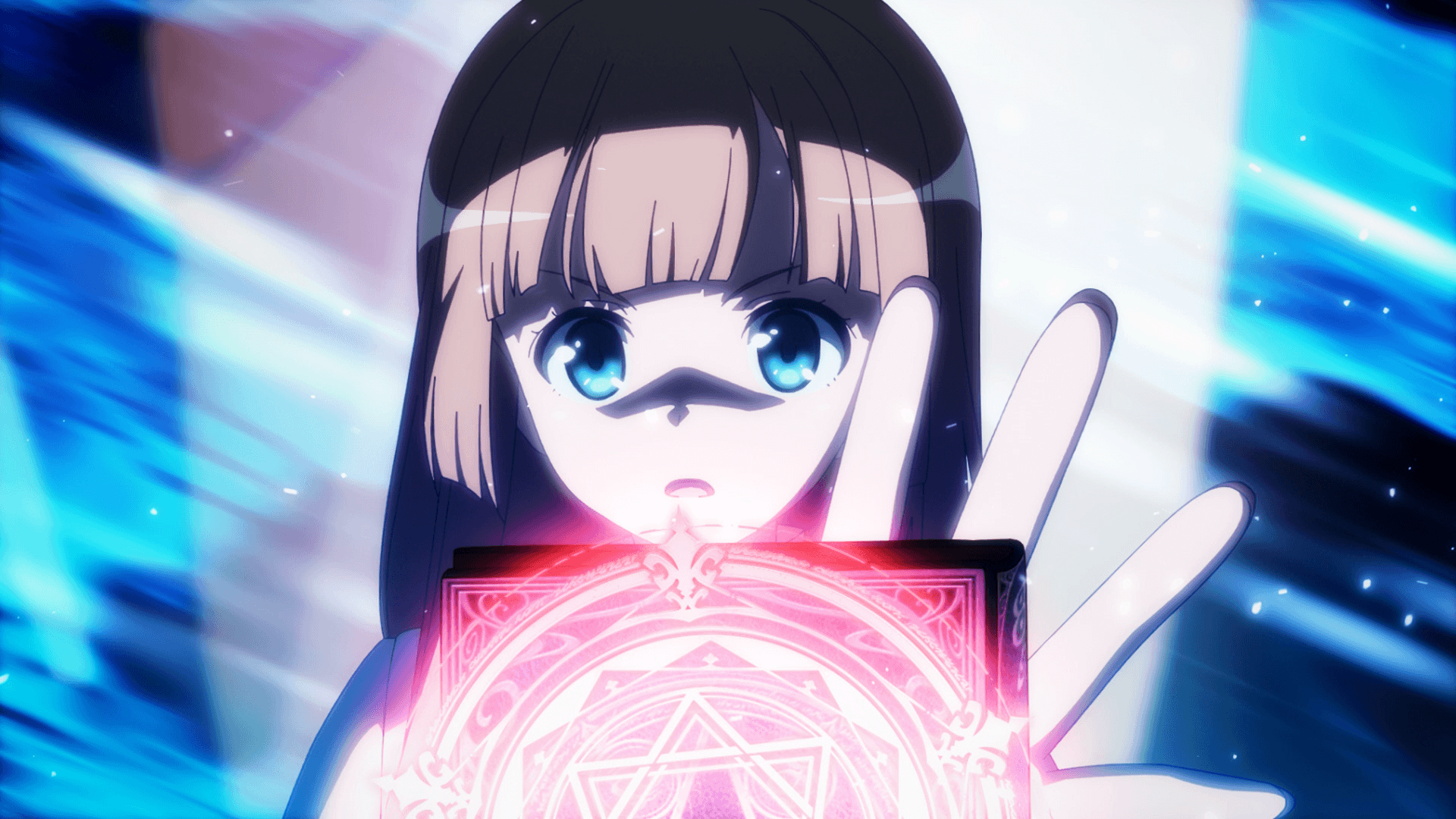

The sign above is actually one of the more simple kinds that the Typesetter might have to deal with. It is static, meaning it does not move, and has plenty of room around it to place the translation. Other signs will be much more difficult. Take for example this scene from Kobayashi-san Chi no Maid Dragon:

Though it may be hard to believe, the typesetting on the right side of the screen was done entirely with softsubs (using Aegisub), subtitles that can be turned on and off in the video player as compared to hardsubs (using Adobe After Effects) which are burned in. Each group and language “scene” will have different standards in regards to soft and hardsubs. For example, in the English scene, hardsubs are considered highly distasteful, whereas in the German scene they are readily accepted.

Something to remember about typesetting is that there is no one way to typeset a sign. There are, however, incorrect ways that are not visually pleasing, do not match the original well, are difficult to read, or are too heavy (meaning computer resource intensive).

Editor

Time commitment per episode: 2-4+ hours

The Editor (sometimes abbreviated ED) is responsible for making sure that the script reads well. Depending on the source of the script, this may mean grammatical corrections and some rewording to address recommendations from the Translation Checker. However, more often than not, the job will entail rewriting, rewording, and characterizing large portions of the script. Each group will have different expectations of an Editor in terms of the type, style, and number of changes made. The Editor may also be responsible for making corrections recommended by the Quality Checkers.

Quality Checker

Time commitment per episode: 30 minutes to 4 hours (depending on your own standards)

Quality Checkers (abbreviated QC) are often the last eyes on an episode before it is released. They are responsible for ensuring that the overall quality of the release is up to par with the group’s standards. They are also expected to be familiar with the workflow and many intricacies of every other role. Each group has a different approach to how the Quality Checker completes their work. For example, one group might require an organized “QC report” with recommended changes and required fixes, while other groups may prefer the Quality Checker to make changes directly to the script whenever possible.

Translator & Translation Checker

Time commitment per episode: 1-3 hours for translation check, 4+ hours for an original translation (dependent on the skill of the TL/TLC and the difficulty of the show’s original script)

The job of the Translator (abbreviated TL) and the Translation Checker (abbreviated TLC) is to translate and ensure the translational quality of the script and signs respectively. This is perhaps an obvious statement, but it bears explaining just in case. Today, many shows are simulcast by one or more companies, meaning scripts will be available either immediately or soon after airing in Japan. In these cases, some fansub groups may choose to edit and check the simulcast script rather than translate it from scratch. This depends almost entirely on the quality of the simulcast. Fixing a bad simulcast script may be harder than doing an original translation (abbreviated OTL). Finally, translators are responsible for transcribing and translating opening, ending, and insert songs as well.

Karaoke Effect Creator

Time commitment: several hours, once or twice per season

The Karaoke Effect Creator (abbreviated KFX) styles and adds effects to the lyrics and sometimes romaji and/or kanji for opening, ending, and insert songs. This can be very similar to typesetting but utilizes a different set of tools and can be highly programming-oriented.

TODO - sources for AviSynth and VapourSynth builds relevant to fansubbing.

Further reading on the x264 and x265 libraries can be found here.

Comparisons of various audio codecs can be found here.

Requirements

Language

There are fansub groups for almost every language in the world. So, while anyone is welcome to read and use this textbook, I recommend applying your skills in a fansub group centered on your native language. Of course, there are some roles that don’t require any language skills to complete them. But you will still need to communicate with the other members of your chosen fansub group, and a language barrier can make that difficult.

Hardware

Every fansubber will need a computer. Some roles will have higher requirements. Below are some minimum recommended computer specifications based on the role. Can you do the job with less than what’s below? Probably, but it could make your job much harder than it needs to be.

- Timer, Editor, Translator, Translation Checker

- Some of the most forgiving roles in fansubbing for computer hardware.

- OS: Windows 7, Mac OS X 10.7, Linux

- Screen: 720p

- CPU: dual-core >2Ghz

- Computer should be able to playback HD anime with subtitles.

- Memory: 4GB

- Aegisub loads the entire video into memory. With larger HD videos being standard today, this could be up to several GB.

- Storage: 50GB available

- Mouse: recommended

- Internet: 25 Mbps download

- Typesetter, Quality Checker

- The middle of the road in terms of required computer hardware.

- OS: Windows 7, Mac OS X 10.7, Linux

- 64-bit recommended

- Screen: 1080p

- CPU: dual-core >2.5GHz (quad-core >3GHz recommended)

- Computer should be able to playback modern fansubbed anime releases with high settings.

- Memory: 8GB

- Aegisub loads the entire video into memory. With larger HD videos being standard today, this could be up to several GB.

- Windows loads installed fonts into memory on boot. For typesetters, the font library could grow to be several GB.

- Storage: 100GB available

- Mouse: required

- Internet: 25 Mbps download, 5 Mbps upload

- Encoder

- The most demanding role in terms of computer hardware.

- The speed and capabilities of the computer directly correlate to encode times and the stability of encoding tools.

- OS: Windows 7, Mac OS X 10.7, Linux

- 64-bit required

- Screen: 1080p

- IPS panels highly recommended for color correctness.

- VA panels highly discouraged.

- CPU: quad-core >4GHz

- More cores and/or higher speed are better (e.g. AMD Ryzen, Threadripper or Intel Core i7+).

- CPU Requirements:

- Hyperthreading

- AVX2

- SSE4

- Memory: 8GB

- Memory can be a bottleneck when encoding. More, faster memory is always better for encoding rigs.

- Storage: 500GB available

- Encoders sometimes deal with files up to 40GB each and regularly with ones between 1GB and 8GB and may be required to retain these files for a long time.

- Internet: 25 Mbps download, 25 Mbps upload

Software

Every role will have different required software, but it is recommended for every role to have installed Aegisub. It is highly recommended to use CoffeeFlux’s builds. They come pre-equipped with Dependency Control and several critical fixes to Aegisub that have not been merged into the official application.

More specifics will be presented in the chapters devoted to each role.

TODO - pages for each role

Programming

Prior knowledge of some programming languages can be extremely useful for fansubbing, though it is not required. Specifically, Lua and Moonscript are useful for Typesetters. Encoders will find that Python is used to interact with VapourSynth, so learning it ahead of time will be to their advantage.

Collaboration with FTP

In a fansub group, multiple users will need to be able to access and modify a myriad of files. An easy and straightforward way to do this would be by setting up an FTP server. This makes it easy for users to share their files privately, as well as make bigger files easy to find and download by the right people.

FTP setups are very common in fansubbing for this exact reason. An FTP servers offers an easy place for translators and typesetters to upload their subtitle files, as well as for encoders to upload their premuxes or grab source files from.

Setting Up an FTP

TODO: Describe how to set up a basic FTP server, make it connectable, etc.

Folder Structure

TODO: Describe reasonable

Avoiding Filename Conflicts

TODO

Security

TODO: Security measures you can take to prevent someone from wrecking your FTP. Things like user accounts, restricting access per user or group, etc.

Collaboration With git (via GitHub)

When working in a group, you’ll often find multiple people making changes to the same or multiple files. The Translator will need a place to upload their translation to, the typesetter(s) may need a place where they can upload their typesetting to, etc. For this kind of operation, a git environment may be preferable. With git you can very easily do version control, which allows you to see who made changes to what files and when, and also create an easy place to find all the relevant files and their contents without requiring users to blindly download files to edit them the same way they would when working in an FTP setup.

There are many benefits to using GitHub for your project, but it may also require users to be more tech-savvy than with a regular FTP setup, and for there to be someone to the side who can solve any problems that may arise. Additionally, having an FTP available may still be a good idea for your encoders, since they will still need a place to upload bigger files to, which GitHub is not suited for.

Setting Up a GitHub Repository

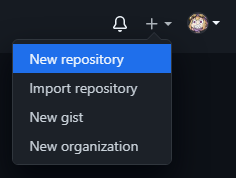

If you are the project leader, you will likely want to be the one to create a repository. For bigger groups, we recommend you start an organization. This will allow you to keep better track of your ongoing projects, as well as assign more administrators who can create and maintain repositories. You can create a repository by pressing the + button on the top-right of the page and clicking “New repository”.

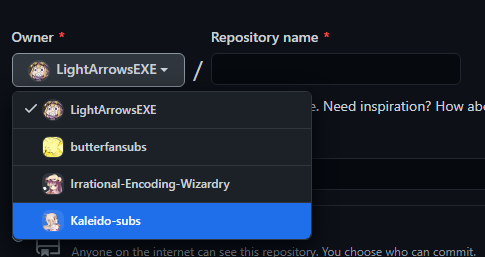

You can then create a new repository under your own username or an organization you have writing access to.

Give your repository a name, and decide to make your repo either public or private. You may also want to add a README if you want to share additional information about the project, like how to build it, or links to your group’s website for example. You can then create the repository by pressing “Create repository”.

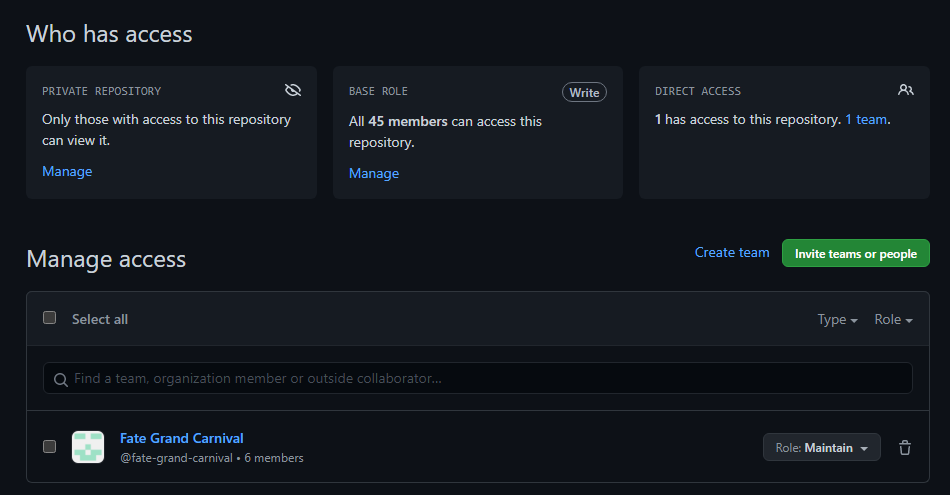

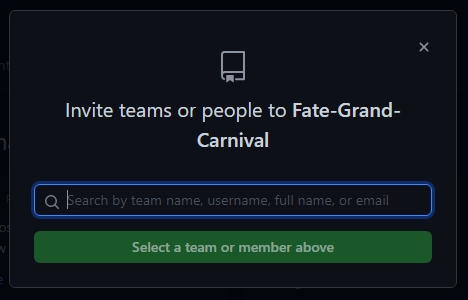

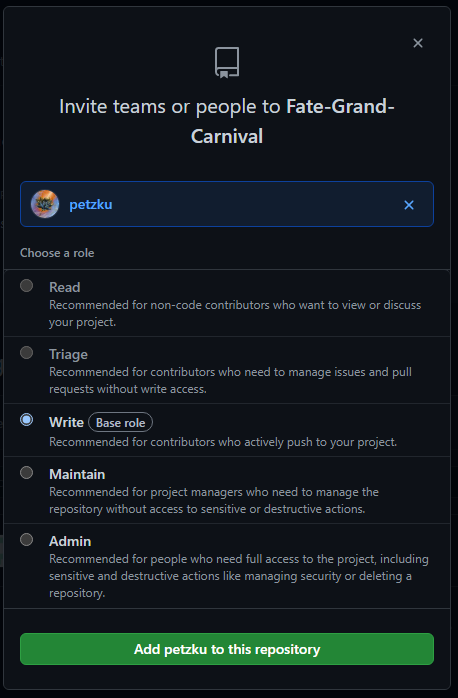

If you’re working with multiple users, you’ll want to make sure you to add them to the repository as well. To do this you go to your repository’s settings, and then click on “Manage access”.

Here you can manage what users (and teams in an organization) have access to your repository. When inviting someone, you’ll want to make sure you give them the necessary permissions. If they’re a team member, give them write access.

Basic Actions

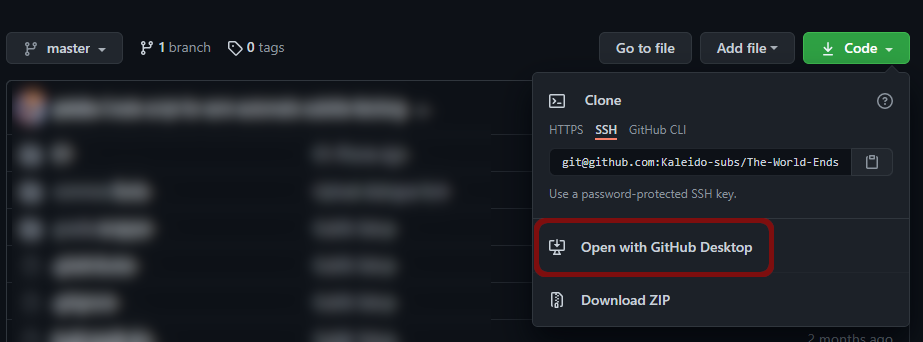

If you are new to GitHub, we highly recommend you use the GitHub desktop client (this also works with other, similar platforms). This guide was written under the assumption that most users will be using this client.

Cloning

After installing the desktop client,

open it and log in.

You can then clone the repository to your system by going to File > Clone repository,

or by going to the repository on GitHub and going Code > Open with GitHub Desktop.

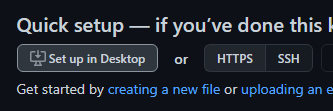

If the repository has not been set up yet, you can set it up in your desktop client through the link on the repository. This will automatically open up the cloning menu in your desktop client.

Syncing File Changes

When editing files from a git repository, git will remember and verify what files have been changed. Before making any commits however, you’ll want to first double-check that you have every file pulled to your local repository. Click “Fetch origin” at the top, and pull in any newly-pushed commits made by other users.

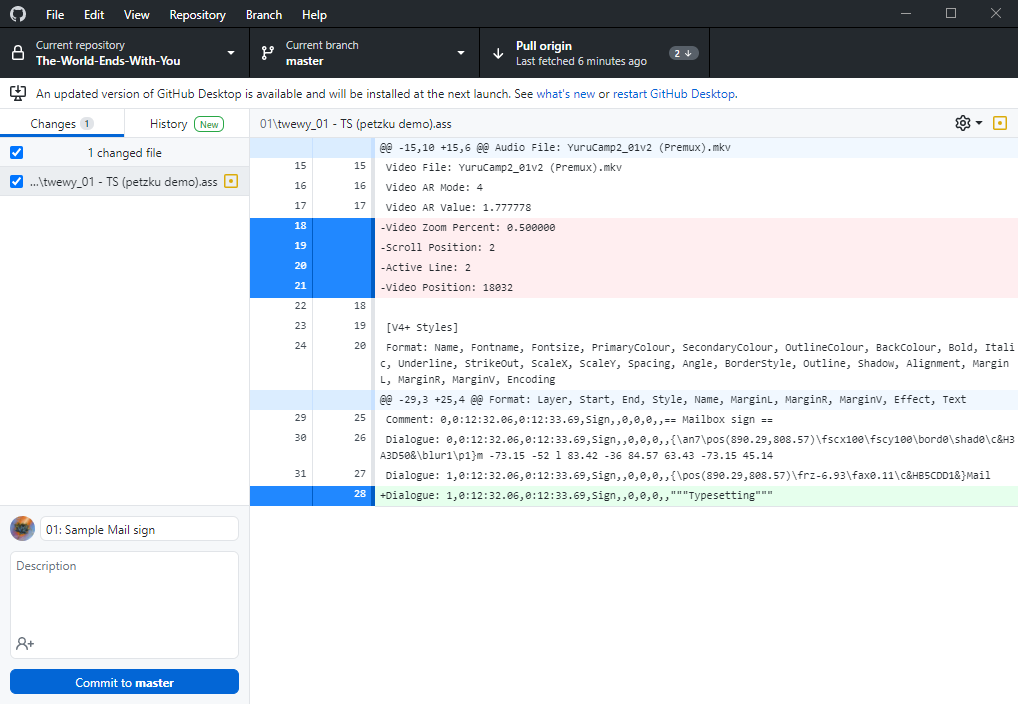

Now you can verify your own changes and commit the files you want to upload/update in the repository. The desktop client will additionally give you a very useful display of what is different between your file and the latest version of the file you pulled in from the repo.

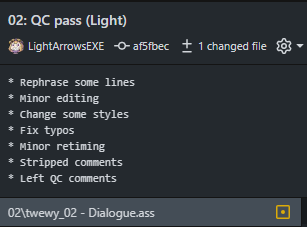

To make things easier for your teammates,

you’ll want to make sure to give your commit a helpful title and description if necessary.

An example of a helpful commit title would be an episode number and what you did in the commit.

For example,

03: Typeset train sign or 08: Edited Part A.

The description can contain more detailed information,

like what exactly was changed (if multiple things were changed),

what problems you ran across along the way,

etc.

An example of a description that describes what changes were made in greater detail than a title would.

An example of a description that describes what changes were made in greater detail than a title would.

Finally, press “Commit to master”, and once you’ve finished making all your changes and committed them, press “Push origin”.

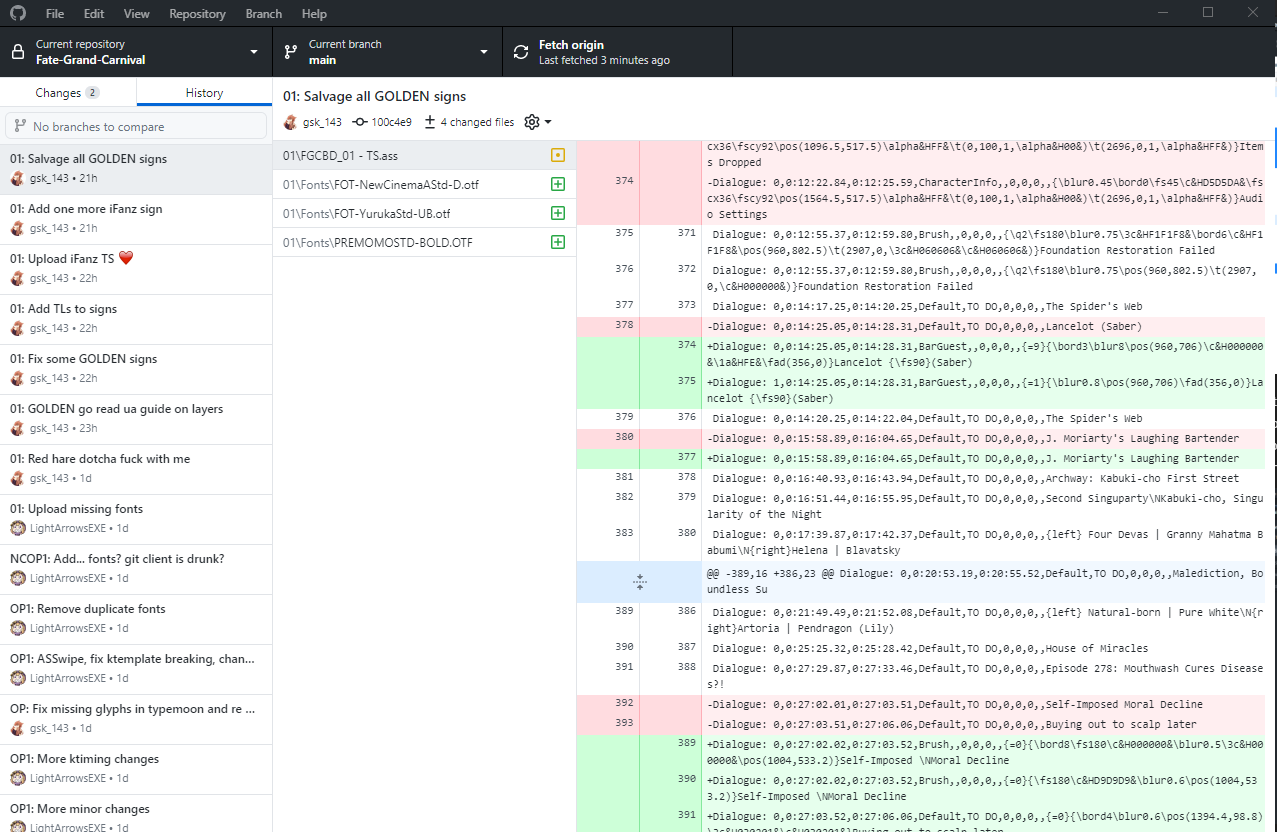

If you want to check the history to see what your teammates have changed, you can view the history by clicking the “History” tab. This will give you a useful oversight of all the commits, as well as their titles/descriptions and what changes have been made to the files.

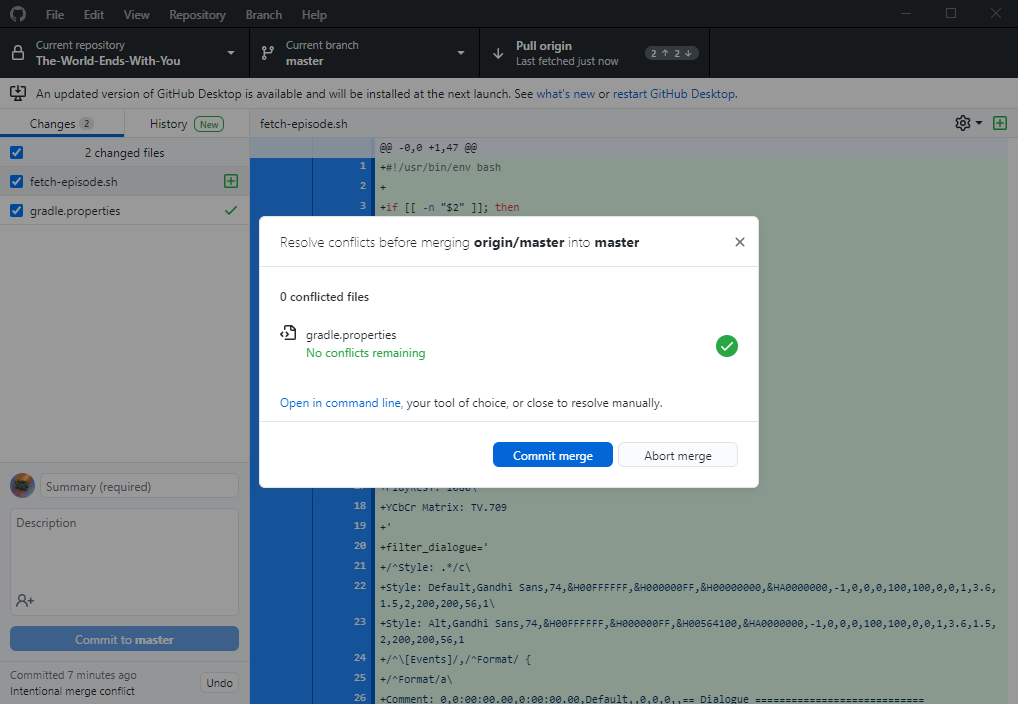

Resolving Merge Conflicts

There are going to be times when multiple users accidentally write to the same file at the same time. This is usually no issue, but you may from time to time run into a merge conflict. This means that changes made by multiple users are incompatible with one another, and will require manual fixing.

To resolve these,

you’ll want to open up the files with merge conflicts in your text editor

(do not use Aegisub for this).

The parts of the file with conflicts will have a <<<<<<< HEAD before them,

and a >>>>>>> at the end of them.

The differences will be separated by a ========.

You’ll want to look over the differences,

and change them however necessary for your final project.

Finally, you’ll want to remove the conflict marker lines,

so all the <<<<<<< HEAD,

========,

and >>>>>>>’s.

Repeat this until you’ve dealt with every merge conflict,

and then go back to the desktop client and press “Commit merge”.

Make sure you push your changes after!

Setting Up the Files For Your Project

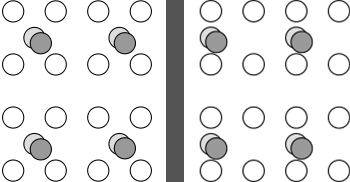

Now that you know how to perform the basic actions, it is important to understand how you can best utilize GitHub. When working in a group, it is important you don’t trip over your teammates. To help with, it is generally recommended you split up the files as necessary to allow people to work in parallel without constantly having to deal with merge conflicts.

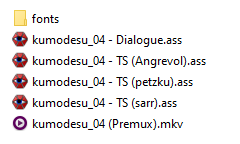

The translator, editor, translation checker, and timer will generally require access to a single file, and it’s important they work sequentially; that means they do their job one at a time in an order they agreed on. There are tools available that help separate the timing job from that setup, but that will be covered in a different guide.

The typesetters will also need to have their own files. This separates them from the main subtitle script, which would otherwise get massive signs added while the other members are working, and be harder to work with for them as a result. If there are multiple typesetters, it’s a good idea to split it up further into individual “TS files”. This allows each typesetter to do their part without interfering with the other typesetter’s work.

An example of how files are typically split.

An example of how files are typically split.

You can further split this however you think works best for your project. You could for example add synced Opening/Ending subs to every directory, or an ASS file with an insert song, or an ASS file with all the ktemplates used in that particular episode. Just make sure it all fits well with your group’s workflow.

Using GitHub For Distribution

While it may be helpful for members to work on GitHub, sometimes people will simply prefer other setups. Even in those situations, GitHub can prove useful for distribution. It can be the place to look for the most up-to-date scripts after release for instance, with errors that were reported post-release fixed. This way people can always update their scripts if necessary without you needing to release a v2, and they also have a convenient hub to report errors.

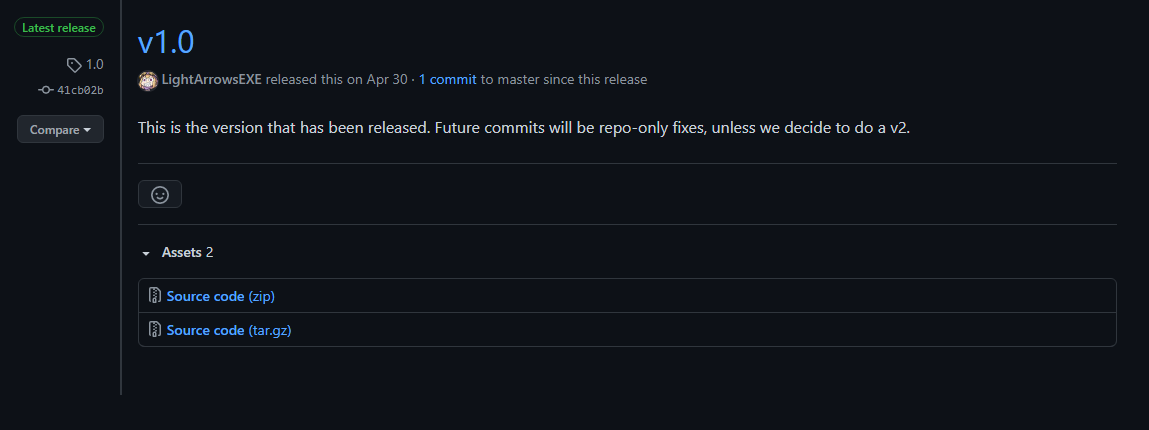

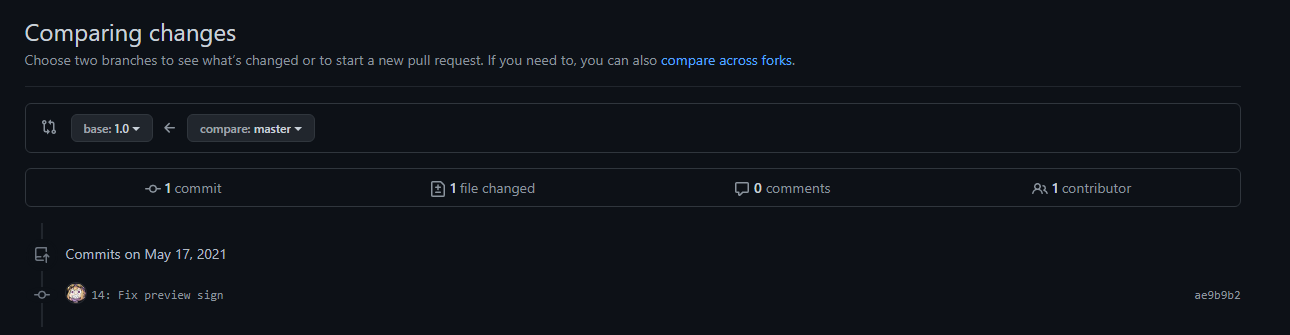

If you decide to use a GitHub repository for this, it is recommended you create “Releases”. This way people can easily see when your project was “finalized”, and it gives a convenient way to see what commits were made after finalization.

v1 release tag

v1 release tag

Commits made since v1

Commits made since v1

Examples

Here are some example fansub repositories:

Preparation and Necessary Software

While the term “encoding” originally just referred to the opposite of decoding—that is, compressing raw video with a video codec—the term has a broader meaning in the context of fansubbing. Here, “encoding” includes the entire process from receiving the source video until the final release. Usual steps are processing or filtering of the original video to remove defects, compressing the video in a way that does not generate new artifacts, transcoding audio to the desired format, and muxing video, audio, subtitles, fonts, and other attachments into a container, such as mkv.

Each of these steps requires different tools which will be listed and explained in the following paragraphs.

It is assumed that you already have a source video at this point, so software like torrent clients, Perfect Dark, Share, or even FileZilla will not be covered. If you don’t have a reliable way to get raws and if your group doesn’t provide them, try finding a source first. Private bittorrent trackers like u2 or SkyeySnow are good starting points.

Processing and Filtering

The Frameserver

In order to process your source video (which will be called “raw” throughout this chapter), you need to import it into a so-called “frameserver”, a software that is designed to process a video frame-by-frame, usually based on a script that defines various filters which will be applied to the video.

Currently, only two widely-known frameservers exist: AviSynth and VapourSynth.

While many (especially older) encoders still use AviSynth, there is no reason to use it if you’re just starting to learn encoding.1 Most AviSynth users only use it because they have years of experience and don’t want to switch.

Since this guide is aimed towards new encoders, and the author has no qualms about imposing his own opinions onto the host of people willing to listen, the guide will focus on VapourSynth. AviSynth equivalents are provided for certain functions where applicable, but the sample code will always be written for VapourSynth.

That being said, the installation of VapourSynth is quite easy. It is strongly recommended to install the 64-bit version of all tools listed here. VapourSynth requires Python 3.8.x or newer. VapourSynth Windows binaries can be found here. Linux users will have to build their own version, but if you’re on Linux, you probably know how to do that. During the installation, you might be prompted to install the Visual C++ Redistributables. Just select “Yes” and the installer will do it for you.

And that’s it. You can test your VapourSynth installation by opening the Python shell and typing:

>>> import vapoursynth

If the installation was not successful, you should receive an error that reads:

Traceback (most recent call last):

File "", line 1, in <module>

ImportError: No module named 'vapoursynth'

In that case, make sure your current Python shell is the correct version (Python version as well as architecture), try restarting your PC, reinstall VapourSynth, or ask for help.

Plugins

In addition to VapourSynth’s core plugins, community-created scripts and plugins can be installed to extend the functionality of the frameserver. These are usually more specific than the universally usable core plugins or they are collections of wrappers and functions. An extensive database of VapourSynth plugins and scripts is available at VSDB. VSDB also offers a GUI for vsrepo, VapourSynth’s official package manager, and a plugin pack (labeled “Portable FATPACK”) that bundles most popular plugins, scripts, and VS-related applications into one archive for ease of download and installation.

An alternative to the latter is eXmendiC’s encode pack, which contains a lot of encoding-related applications and scripts, on top of a broad collection of VapourSynth plugins and scripts. However, the package is not maintained anymore, and may in part include outdated software.

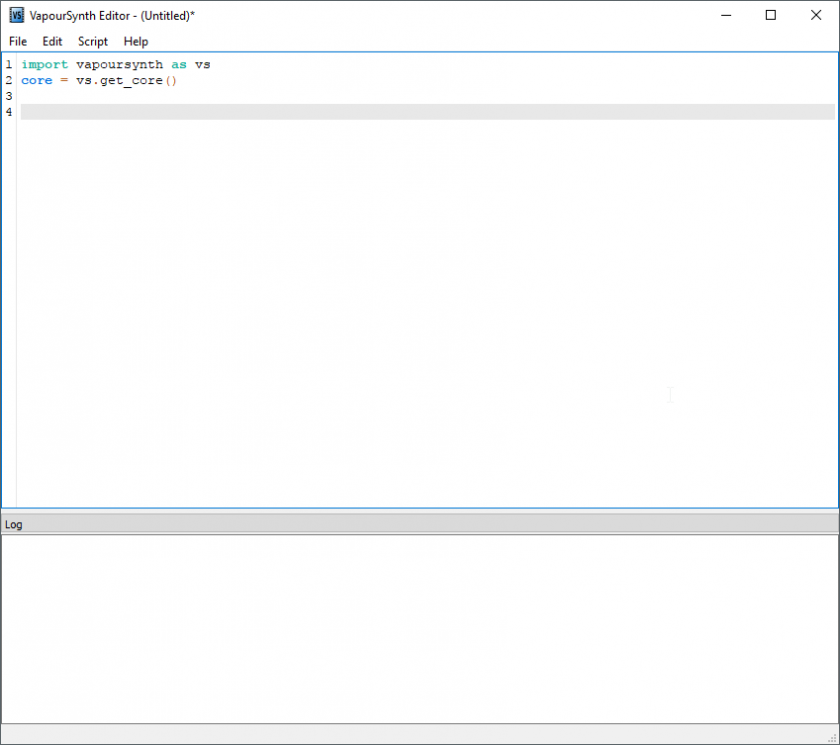

The Editor

Now that you have installed the frameserver, you can start filtering the video. But without an editor, you have no means of previewing the results other than test encodes or raw output into a file. That’s why editors exist. They provide useful features such as autocompletion, tooltips, preview images, and comparisons.

There are four editors that can be used to preview your VapourSynth-Script.

-

VSEdit. It is a small editor software that can run on your PC. It can be downloaded here. It provides an easy and simple GUI to write your VapourSynth scripts.

The main window of VSEdit.

The main window of VSEdit.While it seems to be unstable on some systems, its high performance preview window offsets its problems.

-

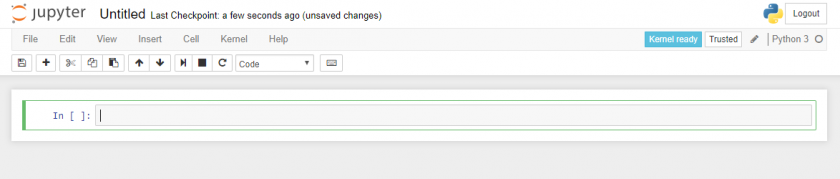

Yuuno. While it is not an editor, Yuuno is an extension to a Python-shell-framework that runs inside your browser. This increases latency, but it gives you a wider range of preview related features while being more stable than VSEdit. It should be noted that Yuuno natively supports remote access, as it is only an extension for Jupyter Notebook.

A Jupyter Notebook.

A Jupyter Notebook. -

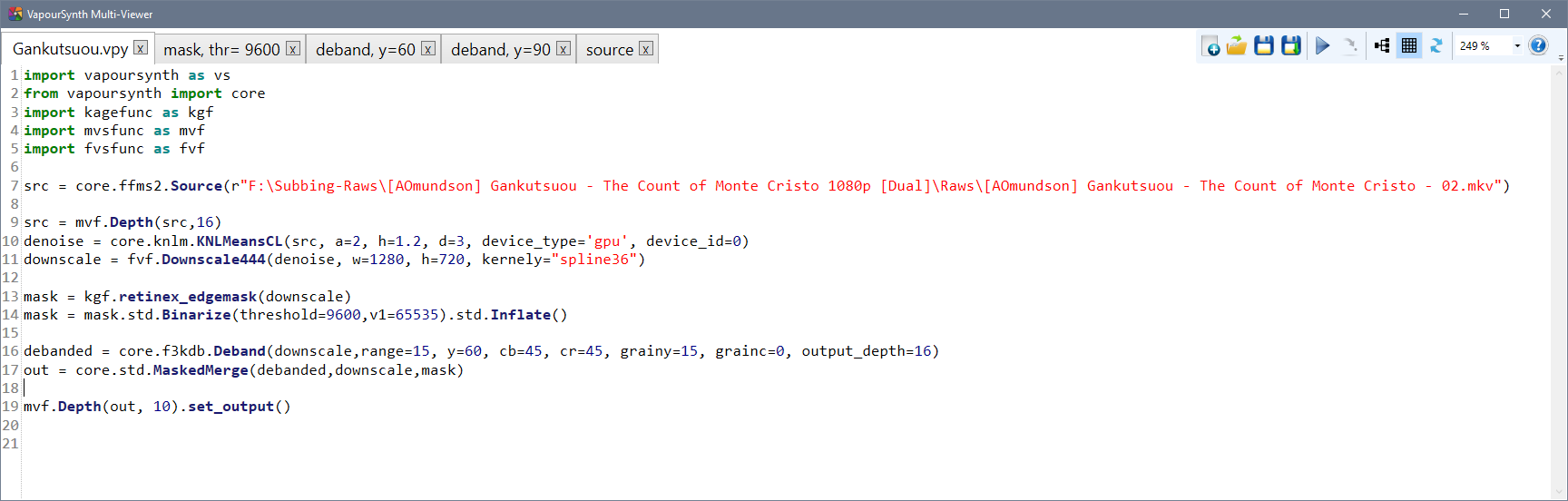

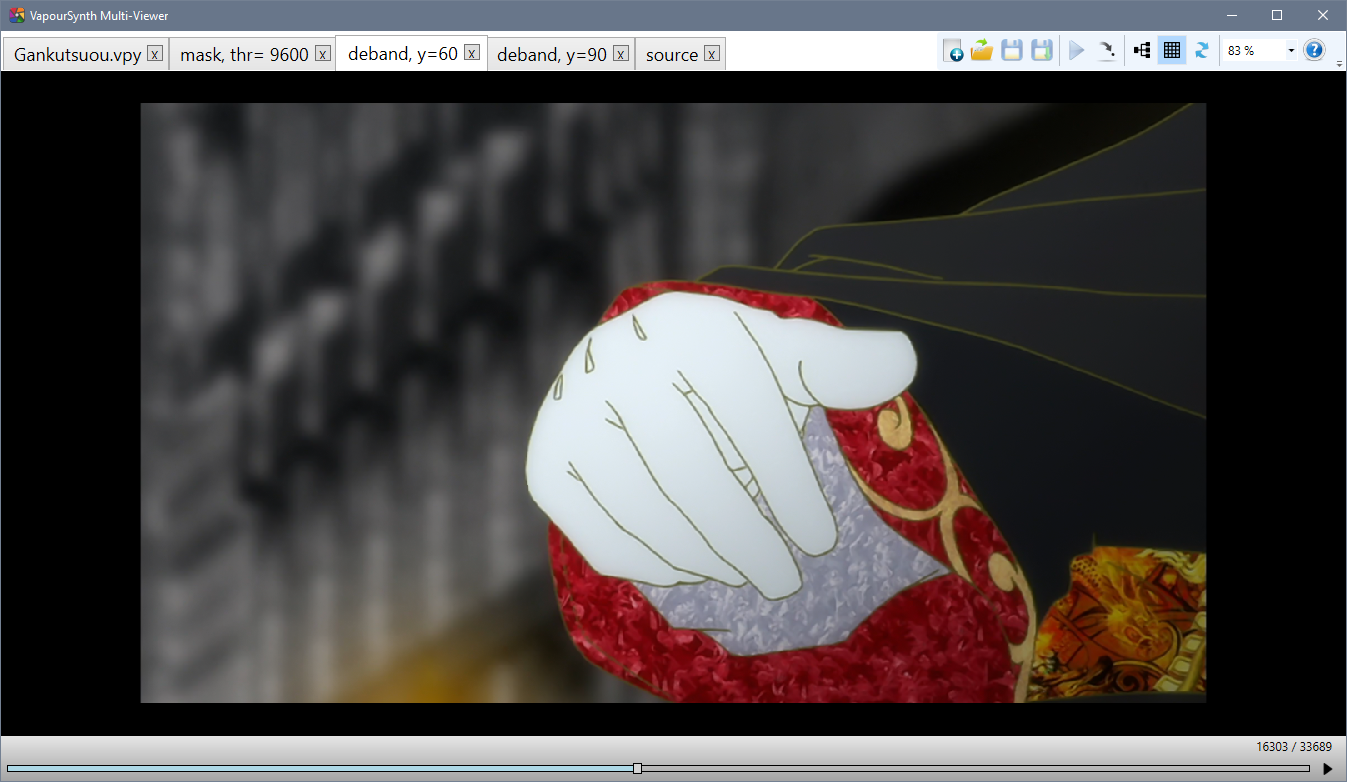

VapourSynth Multi-Viewer. Multi-Viewer is a very simple and elementary previewing tool. While the text editing is vastly inferior to VSEdit’s, and the preview lacks a lot of VSEdit’s advanced features, its tab-based previewing functionality easily outclasses VSEdit’s single preview window, because it makes comparisons between different script versions a lot more convenient and efficient.

In short: very useful for thorough comparisons and filter fine-tuning, not so much for everything else.

VS Multi-Viewer’s editing window.

VS Multi-Viewer’s editing window. VS Multi-Viewer’s preview window.

VS Multi-Viewer’s preview window. -

AvsPmod. This is the editor for AviSynth. It is old and slow but stable. When you are using AviSynth, you are limited to this editor. AvsPmod can handle AviSynth and VapourSynth scripts, however, VapourSynth support was an afterthought and is therefore experimental, unstable, and “hacky”.

Do not use AvsPmod for VapourSynth scripts unless you have a very good reason!

Please rest assured that the author does not impose any editor on you. Instead we will give callouts for some editors. These will be completely optional.

Video Codecs

Once you are happy with the result of your filter chain, you want to save the output to a file. While it is possible to store the script’s output as raw, uncompressed pixel data, that would result in hundreds of gigabytes of data for a single episode. Because of this, we use video codecs to compress the video.

Lossless compression will still result in very big files, so we have to use lossy compression, which means losing some information in the process. As long as you’re not targeting unreasonable bitrates (say, 50 MB per 24 minute episode), this loss of information should be barely noticeable. This process can be quite difficult, so there will be an entire page dedicated to it.

None of the encoders mentioned here need to be installed. Just save the executable(s) somewhere for later.

For now, all you need to know is which codecs exist and which encoders you want to use.

The codec used most commonly is h.264,

and the most popular h.264 encoder is x264.

The most recent builds can be found on VideoLAN’s site.

Pick the most recent build for your operating system.

At the time of writing this,

win64’s recent build is x264-r2935-545de2f.exe

from 25-Sep-2018.

(Notice: the 10-bit binaries are no longer separate from the 8-bit

as of 24 Dec 2017,

meaning the releases with -10b can be ignored)

You can also build it locally from the public repository.

It used to be that different versions,

namely kmod and tmod,

were required for certain encoding features

such as aq-mode 3.

However, most relevant features have been

added to the upstream x264 builds.

Because of this, kmod is now unmaintained.

tmod is still being updated with changes from new x264 versions,

and it provides some potentially useful parameters such as --fade-compensate

or --fgo (film grain optimization), as well as additional AQ algorithms

(aq2-mode, aq3-mode, and parameters for these),

which are generally regarded as useless for almost all sources.

The current tmod release can be downloaded from the github page.

A newer, more efficient alternative is HEVC, with x265 being the most popular encoder. It is still in active development and aims for 20-50% lower bitrates with the same quality as x264. It does have its flaws, is a lot slower, and not as widely supported by media players as x264, but it can be a viable alternative, especially if small files are important and encoding time is of secondary importance. Note that many groups will require you to use x264, so ask your group leader before picking this encoder.

Other codecs, such as VP9, are generally not used for fansubbing, so they are not listed here. The same is true for experimental codecs like Daala and AV-1. Encoders made for distributed server encoding, such as Intel’s SVT-AV1 will also not be included.

Audio

Audio formats and how to handle them

Depending on the source you’ll be working with, you may encounter many different audio formats.

On Blu-rays, you will most likely find audio encoded losslessly, in the form of either DTS-HD Master Audio, Dolby TrueHD, or PCM. DTS-HD MA and Dolby THD are proprietary codecs that use lossless compression, while PCM is simply raw, uncompressed PCM data. The usual way to handle these is to re-encode them to other formats—either lossless or lossy, depending on your taste. But first, you need to decode them. The recommended tool for that is FFmpeg. You can find Windows builds and Linux packages on FFmpeg’s official site. It doesn’t need to be installed—you can just extract it somewhere. But, since it is useful for many different tasks, adding it to the system PATH is recommended.

When working with WEB and TV sources, you will most likely have only lossy audio available. The most common codecs here are AC-3, E-AC-3 and AAC.

Avoid re-encoding lossily compressed audio

Lossily compressed audio should generally not be re-encoded. The proper way to handle them is to remux (i.e. copy) them to the final file. Re-encoding lossy audio into a different lossy audio format cannot improve the audio quality for barely improved compression (in relation and assuming a lower bitrate), while encoding into a lossless audio format is just wasting bandwidth. Your source file will always have the most data available and using a lossless format for that can not generate data lost from the previous compression from thin air.

Which codecs to use?

Once you have your lossless files decoded, you need to encode them. Depending on your taste, you can choose a lossy or lossless codec. The two most widely accepted codecs in fansubbing community are FLAC (lossless) and AAC (lossy), but recently opus (also lossy) is gaining some popularity, too.

The recommended encoder for FLAC is the official one. Download Windows builds from xiph’s website. Most Linux distributions should have FLAC in their package repositories.

The recommended and most widely used AAC encoder is qaac, available on its official site. Nero and Fraunhofer FDK aren’t really that much worse, so you can use them if you really want. Other AAC encoders are discouraged, since they provide inferior results.

There is also opus, which is gaining some popularity recently. It is currently the most efficient lossy codec, and it’s completely FOSS if you’re into that. The recommended opus encoder is the official one, contained in the opus-tools package.

Just as with video, these encoders don’t need to be installed. Qaac will require some configuration, tho.

Other codecs are generally not recommended. Formats like Monkey’s Audio and TAK provide very little gain over FLAC, while not being as widely supported, and—in the case of TAK—closed source. DTS-HD MA and Dolby THD are much less efficient than FLAC, and are also closed source. MP3 is simply obsolete, and Vorbis has been superseded by opus. DTS and AC-3 provide even worse compression than MP3, and don’t have any reasonable, free encoders. In short—don’t bother, unless you really have to, for some reason.

Lossless or lossy?

This is entirely dependent on you. Some people like the idea of having a (theoretically) perfect copy of the master audio file, don’t mind the increase in size, and state that lossless is the only way to go when archiving. Others prefer smaller file sizes, knowing that the difference—assuming high enough bitrate—won’t be audible anyway. And they both have a point.

So, do some ABX testing and decide for yourself.

However, remember that you should not re-encode lossily compressed audio into a lossless format.

MKVToolNix

You probably have at least three files now—that being the video, audio, and subtitles—and you need to combine all of them into a single file. This process is called muxing.

MKVToolNix is used to mux all parts of the final output into an mkv container. Most people use MKVToolNix GUI, which provides a graphical user interface to mux video, audio, chapters, fonts, and other attachments into an mkv file. Installation instructions for virtually any platform can be found on their website.

It is possible to use other containers, but Matroska has become the standard for video releases due to its versatility and compatibility.

It should be noted that the author strongly disagrees with this sentiment. The two have a lot in common, and any capable AviSynth encoder could reach a similar level in Vapoursynth within a few months, maybe even weeks. At least I’m honest, okay?

Basics and General Workflow

Preparation

downloading a source, looking at the video, some decisions (resolution(s) for the release, audio codec, group-specific requirements)

Writing the Script

imports, source filter (mention lsmash, ffms2), examples for resizing, debanding, AA. with images if possible

Encoding the Result

$ vspipe.exe script.vpy -y - | x264.exe --demuxer y4m --some example --parameters here --output video.264 -

Editors for VapourSynth usually have inbuilt support for encoding

scripts you wrote. Use %encode --y4m <clip_variable> in Yuuno or the GUI

provided by VSEdit.

Transcoding Audio

As said earlier, only qaac will require configuration, everything else can simply be extracted wherever you like. Nonetheless, it is easier to add every binary you use to the PATH environment variable, and the rest of this guide will assume you’ve done exactly that.

Decoding audio with FFmpeg and piping it out

Basic ffmpeg usage is simple. You just need to specify your input file and your desired output file, like this:

ffmpeg -i "input.dtshd" "output.wav"

This command will decode the DTS-HD MA audio file and encode it as a WAVE file. This command doesn’t specify any options so ffmpeg will resort to using its defaults.

For transcoding from DTS-HD MA only one option is needed:

ffmpeg -i "input.dtshd" -c:a pcm_s24le "output.wav"

The -c:a pcm_s24le parameter will tell ffmpeg to encode its output with a depth of 24 bits.

If your source file is 16 bits,

change this parameter to pcm_s16le or simply skip it

because 16 bits is the default.

16 bits per sample are, in almost all situation and most definitely also for anime, more than enough data points to store audio data. Thus, you should use 16 bits per sample in your output formats and only use 24 bits for the intermediate WAVE file, iff your source file already used 24 bits to begin with.

Refer also to the following article:

The command above will decode the source file and save a resulting WAVE file on your drive. You can then encode this WAVE file to a FLAC or AAC file, but there is a faster and more convenient way to do that: piping. Piping skips the process of writing and reading the data to and from a file and simply sends the data straight from one program to another.

To pipe from ffmpeg, specify the output format as WAVE using the -f option,

replace the output filename with a hyphen and place a pipe symbol at the end,

which will be used to separate the ffmpeg command from your encoder command,

like this:

ffmpeg -i "input.dtshd" -c:a pcm_s24le -f wav - | {encoder command}

Encoding to FLAC

To encode to FLAC, we will use the flac command line program:

flac -8 --ignore-chunk-sizes --bps 16 "input.wav" -o "output.flac"

-8 sets the encoding level to 8, the maximum level.

This will result in the best compression,

although at the expense of encoding speed.

FLAC encoding is fast, so just stick with level 8.

--ignore-chunk-sizes is needed

because the WAVE format only supports audio data up to 4 GiB.

This is a way to work around that limitation.

It will ignore the length field in the header of the WAVE file,

allowing the FLAC encoder to read files of any size.

--bps 16 specifies the “bits per sample” to be 16.

As noted earlier,

16 bits are enough for our purposes

and FLAC additionally has the downside

that all 24-bit samples are padded to 32-bit samples in the format,

meaning 25% of the storage used is completely wasted.

To encode audio piped from ffmpeg,

replace the input filename with a hyphen

and place the whole command after the ffmpeg command,

like this:

ffmpeg -i "input.dtshd" -c:a pcm_s24le -f wav - | flac -8 --ignore-chunk-sizes --bps 16 - -o "output.flac"

Encoding to AAC

First, set up qaac:

- Go to its download page and download the newest build

(2.70 at the time of writing) and

makeportable.zip. - Extract the

x64folder wherever you wantqaacto be, then extract contents ofmakeportable.zipinside it. - Download the iTunes setup file (

iTunes64Setup.exe) and move it to thex64folder. - Run the

makeportable.cmdscript - You are done. You can now delete the iTunes installation file.

To encode from a file, use the following command:

qaac64 --tvbr 91 --ignorelength --no-delay "input.wav" -o "output.m4a"

The --tvbr 91 option sets the encoding mode to True Variable Bitrate

(in other words, constant quality)

and sets the desired quality.

qaac has only 15 actual quality steps in intervals of 9 (0, 9, 18, … 127).

The higher the number, the higher the resulting bitrate will be.

The recommended value is 91, which will result in a bitrate

of about 192 kbps on 2.0 channel files,

enough for complete transparency in the vast majority of cases.

--ignorelength performs the same function as --ignore-chunk-sizes in FLAC.

--no-delay is needed for proper audio/video sync1.

To encode audio piped from ffmpeg,

replace the input filename with a hyphen

and place the whole command after the ffmpeg command:

ffmpeg -i "input.dtshd" -c:a pcm_s24le -f wav - | qaac64 --tvbr 91 --ignorelength --no-delay - -o "output.m4a"

Read why in this HydrogenAudio forum post.

Encoding to Opus

Encoding a file with opusenc will look like this:

opusenc --vbr --bitrate 160 --ignorelength "input.wav" "output.opus"

--vbr sets the encoding mode to Variable Bitrate (which is the default but it

never hurts to be explicit),

while --ignorelength does the same thing as in qaac.

As you may have noticed,

opusenc uses bitrate control

rather than some kind of constant quality mode2.

Instead of an abstract number that corresponds to a quality target

like with x264’s CRF mode and qaac’s TVBR mode,

you give the encoder your preferred resulting bitrate and it chooses the constant quality target itself.

The recommended bitrate is 160 kbps for 2.0 channels

and 320 kbps for 5.1.

Encoding audio piped from ffmpeg works the same as for previous encoders—just replace the input filename with a hyphen:

ffmpeg -i "input.dtshd" -c:a pcm_s24le -f wav - | opusenc --vbr --bitrate 160 --ignorelength - "output.opus"

Muxing

mkvtoolnix

Recognizing Video Artifacts

The term “artifact” is used to broadly describe defects or foreign, unwanted elements in a video. There can be any number of causes ranging from lossy compression, improper conversions, to post-processing adjustments like sharpening and resampling. This guide will go over how to identify different types of artifacts, how they occur, and some steps to remove them wherever possible.

Before we dive into this laundry list of problems with no clear solutions, let’s start by recognizing some things that often get mistaken for artifacts.

Not Artifacts

Grain

Aside from film grain, grain is added to videos for a few different reasons. It can be added by the studio to create an effect/change the atmosphere, or it can be added by the disc author/encoder to protect against more harmful artifacts from occurring after encoding (mostly banding and blocking). In excess, it may be considered an artifact, but to us anime encoders of the 21st century it is not an artifact, nor is it something we should be expected to remove.

However, it is often mistaken for “noise”, and for anime and lossy compression, these two things may sometimes be indistinguishable, but the two differ at a fundamental level; grain being added at the discretion of a human being1, and noise being added by lossy compression. See the noise section for more information on the subject.

Badly drawn line art

Bad lines happen, but its hard to say whether it’s worth it to try to fix it. Using awarpsharp or sangnom to fix it will surely lead to disaster.

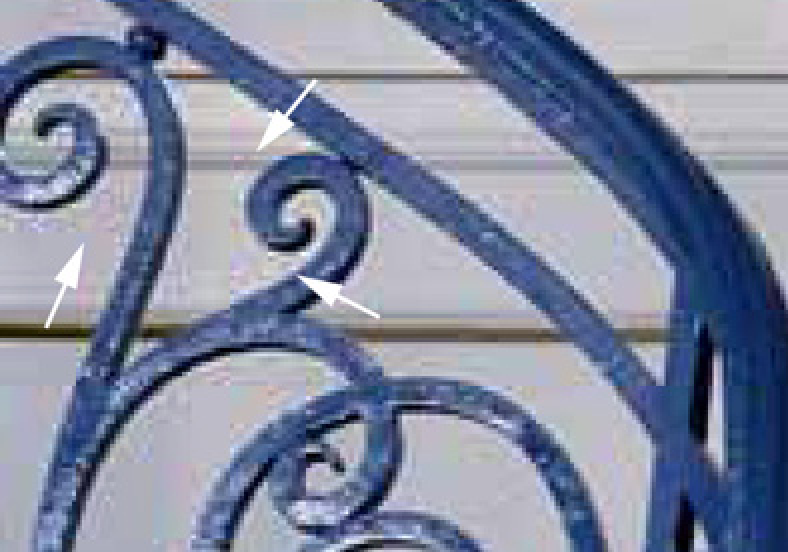

Rakudai-Kishi-no-Cavalry-ep.01.png

Rakudai-Kishi-no-Cavalry-ep.01.png

Chromatic Aberration

Have I been staring at my monitor for too long?

Have I been staring at my monitor for too long?

…and please don’t do this

notevenonce.jpg

notevenonce.jpg

Artifacts

Blocking

DCT block based video compression has come a long way. If you happen to be encoding an MPEG2 TV station or a DVD from a previous decade, you will likely come across something like this:

Blocky Compression

Blocky Compression

Blocky Exaggeration2

Blocky Exaggeration2

From Biamp’s Blog2: “Blocking is known by several names – including tiling, jaggies, mosaicing, pixelating, quilting, and checkerboarding – and it occurs whenever a complex (compressed) image is streamed over a low bandwidth connection (imagine a golf ball being passed through a garden hose). At decompression, the output of certain decoded blocks makes surrounding pixels appear averaged together to look like larger blocks.” (Urban, 2017)

Thankfully most blocking in BDs and web streams nowadays isn’t nearly as bad, and can either be ignored or removed by another stage in your filter chain3. Denoising, debanding, and adding grain will all help to reduce blocking.

Noise

As stated earlier, noise and grain are often used interchangeably. Visually, noise looks uglier and more out of place than grain. It’s less defined and can look blotchy, blocky, or be made up of small dots, whereas grain looks like a proper texture.

In some cases, heavy random grain may be added and then encoded with a low bitrate, resulting in large, noisy, blocky, unstable grain in the video. This is often impossible to remove without noticeable detail loss, and in this case scenefiltering and heavy detail loss are the only two options.

Banding

Example image for banding

Example image for banding

Due to with its many flat areas and smooth gradients, banding is a frequent problem in anime, which is caused by the limits of 8-bit color depth and (especially in low bitrate sources) truncation. The filter GradFun3 is the most common tool for removing it, and is the right tool for the job in average cases.

Some other options are available if this isn’t enough: Particularly large quantization errors, worse banding in dark scenes, and/or banding with grain are cases where experimenting with a few masking/limiting techniques or scene-filtering may be the best option.

Aliasing

Aliasing has a few main causes: interlacing, low bitrate encoding, shitty sharpening, and shitty upscaling (the latter two are often accompanied by ringing).

In the case of resizing, the descale plugin with the right settings may be enough to alleviate the aliasing, but bear in mind that the poorer your source video is, the less effective it will be.

In other cases,

an edge directed interpolation filter,

normally used for deinterlacing,

is used to smooth the edges.

These include nnedi3,

eedi3,

EEDI2,

and sangnom2.

The process involves supersampling and

(re-)interpolating lines in an attempt to minimize detail loss and

maximize continuity.

Masking is very common,

and is always recommended.

There are one or two other methods, the most common of which is a filter called daa. It’s sometimes used, but outside of bad interlacing-related aliasing, it is rarely recommendable.

Ringing

Ringing is something of a blanket term for edge artifacts, including mosquito noise, edge enhancement artifacts, overshoot, or actual ring-like ringing caused by the Gibbs phenomenon.

Mosquito Noise2

Mosquito Noise2

In Blu-ray encodes, the only ringing you’ll be likely to see is upscaling methods such as Lanczos and sharp Bicubic variants, or possibly from badly done sharpening. This is because ringing is primarily a compression artifact, and BDs are generally high bitrate, and even bad BDs don’t tend to ring much.

Thus, you are much more likely to see ringing in low bitrate webrips and MPEG2 TV captures. Despite it being a vastly inferior codec, ringing in MPEG2 sources is actually much easier to deal with than the stubborn ringing in H.264 encodes. In these cases, a simple smoothing based edge-scrubber like HQDeringmod, or a warpsharp-based scrubber similar to EdgeCleaner it has a shitty mask) should all work just fine without too many drawbacks.

In the case of heavily compressed H.264 sources, consider doing a manual masking/limiting/filtering, or scenefiltering with some of HQDeringmod’s safety-checks disabled (change repair from default 24 to 23, or disable entirely)

Haloing

Another edge artifact, this time much cleaner and easier to spot. Halos (especially in anime) are exactly as their title would imply; an even, thick, brightness surrounding lines. In some cases they might even seem like they’re supposed to be there. In Blu-rays this is rarely a problem, but if you do come across it, a masked dehalo_alpha filter such as Fine_Dehalo or a manual filtering of dehalo_alpha with dhhmask (zzfunc.py coming soon™) are recommendable.

Cross-Field Noise

field-noise.jpg

field-noise.jpg

TODO

Underflow / Overflow

While most of the anime produced

use the YUV 8-bit limited range4,

we occasionally find some videos having the “limited range” flag set

while containing full range content.

This often results in oversaturated colors and weird brightness.

Thus, it is strongly recommended

to check the brightness levels

of the 8-bit source with hist.Levels().

Example of underflow (click for comparison)

Example of underflow (click for comparison)

Example of overflow (click for comparison)

Example of overflow (click for comparison)

To fix this problem,

simply use resize like so:

# Only applies to integer pixel formats, since floating point clips are always full range.

clip = clip.resize.Spline36(range_in_s="full", range_s="limited")

or set the “full range” flag on the video, so the values can be interpreted accordingly. Limited range video is more widely supported and players may ignore the “full range” flag, which results in interpreting full range content in a limited context.

In rare cases,

the issue may be more complicated.

For example,

a video may use faulty levels like 0-235 or 16-255

which are neither full nor limited range.

In such cases or similar,

std.Levels can be utilized to correct the range:

# This only applies to 8 bit clips!

# In this example, the input clip uses 0-235 for luma and 0-240 for chroma.

clip = clip.std.Levels(min_in=0, max_in=235, min_out=16, max_out=235, planes=0) # y plane

clip = clip.std.Levels(min_in=0, max_in=240, min_out=16, max_out=240, planes=[1,2]) # u&v planes

Because limited precision with only 8 bit per channel may lead to rounding errors quickly, we prefer adjusting the levels (and our filtering in general) with higher precision, such as 16 bit or float (32 bit). In the example above, you would use the following5:

# 16 bit

clip = clip.std.Levels(min_in=0, max_in=235 << 8, min_out=16 << 8, max_out=235 << 8, planes=0) # y plane

clip = clip.std.Levels(min_in=0, max_in=240 << 8, min_out=16 << 8, max_out=240 << 8, planes=[1,2]) # u&v planes

An example for a case, where shifting the levels with 8 bit precision leads to rounding errors that may result in banding and other weird artifacts, can be seen below.

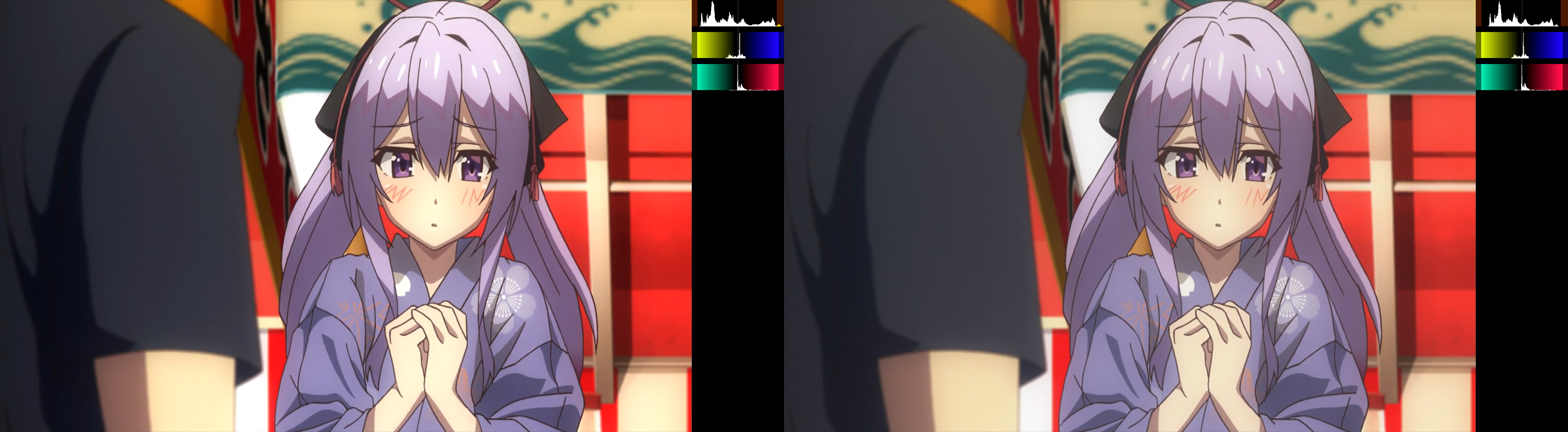

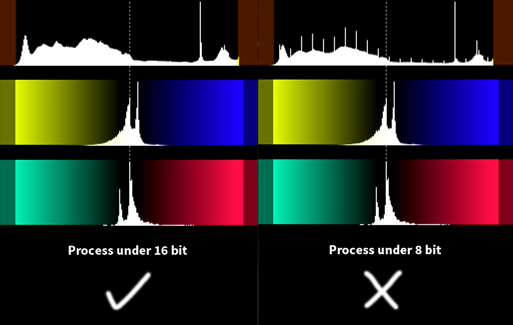

When you see a histogram like this, increase precision.

When you see a histogram like this, increase precision.

At least, in digital anime. Actual grain is different but you most likely aren’t encoding shows from the 90s so who cares.

Urban, J. (2017, February 16). Understanding Video Compression Artifacts. Retrieved from http://blog.biamp.com/understanding-video-compression-artifacts/

Blocking may also occur for other reasons other than compression data loss. Image re-construction with padding can cause very similar looking effects, although this is irrelevant for fansubbing source videos.

The 8-bit limited range (used in rec.601, rec.709, and BT.2020/2100) only defines values within \([16,~235]\) for the Y and \([16,~240]\) for the U and V planes. This means that Y=16 is considered full black and Y=235 full white, while any values outside of that range are clamped virtually (during rendering). U and V behave analogously.

The limited ranges in different precisions are shifted by (multiplied by 2 to the power of) the added bits. For 12-bit, for example, you multiply by \(2^{12-8}\), resulting in \([256,~3760]\) and \([256,~3840]\) respectively. The maximum value in full range is obviously the highest unsigned integer value, so \(2^{12}-1\).

Scenefiltering

Scenefiltering can be hazardous to both your mind and body if used extensively. Avoid scenefiltering if possible.

If you’re an aspiring young encoder or someone who has been around fansubbing for a while, you’ve probably heard the term “scenefiltering”. But what is scenefiltering? As the name suggests, it is simply filtering different scenes or frames of a video clip distinctly.

Creating the base filters

Normally, if you have a source that has great video quality with minimal video artifacts, you can use a simple chain of filters on the entire video without any concern. However, if you have a more complex source with a myriad of video artefacts, you probably don’t want to use the same filters everywhere. For instance, one scene could have heavy banding while another scene might have strong aliasing. If you were to fix both of these issues by using strong filtering over the entire video, it would likely result in detail loss in other scenes, which you do not want. This is where scenefiltering comes in.

As always, you start by importing the VapourSynth module and loading your video source:

import vapoursynth as vs # this can look different based on your editor

core = vs.core

src = core.lsmas.LWLibavSource("source.m2ts")

Next, you need to choose what filtering will be done to the entire clip. Some filtering—like resizing in this example—may need to be put before any other filtering. At this stage, you can also come up with the default filters that need to be in a certain order, but will still be applied to the entire clip. If you can’t come up with anything suitable, don’t fret; you’ll have plenty more chances to filter later.

filtered = core.resize.Bilinear(src, width=1280, height=720)

# will occur at the deband stage, but for entire clip

default_deband = deband(filtered)

Now that you have your common filtering down, you need to create some base filter chains. Go through some random scenes in your source and write down parts of the filtering that best suits those scenes. You should separate these as variables with proper names and sorting (group filters by their type) to keep everything neat and clean. If you do this part well, you will save yourself a lot of time later on, so take your time. At this point, your script should look something like this:

import vapoursynth as vs

core = vs.core

src = core.lsmas.LWLibavSource("source.m2ts")

resized = core.resize.Bilinear(src, width=1280, height=720)

light_denoise = some_denoise_filter(resized)

heavy_denoise = some_other_denoise_filter(resized)

denoised = ...

aa = antialiasing(denoised)

aa = ...

default_deband = deband(aa)

light_deband = deband1(aa)

medium_deband = deband2(aa)

debanded = ...

Adding the frame ranges

Once you’ve done all of that, you’re done with filtering your source—at

least for the most part. Now all you need to do is add

ReplaceFramesSimple calls. For this, you need either the

plugin RemapFrames or

the native Python version in

fvsfunc1.

Rfs is a shorthand for ReplaceFramesSimple

and fvsfunc has the alias rfs.

import vapoursynth as vs

core = vs.core

src = core.lsmas.LWLibavSource("source.m2ts")

resized = core.resize.Bilinear(src, width=1280, height=720)

### Denoising

light_denoise = some_denoise_filter(resized)

heavy_denoise = some_other_denoise_filter(resized)

heavier_denoise = some_stronger_denoise_filter(resized)

denoised = core.remap.Rfs(resized, light_denoise, mappings="")

denoised = core.remap.Rfs(denoised, heavy_denoise, mappings="")

denoised = core.remap.Rfs(denoised, heavier_denoise, mappings="")

### Anti-aliasing

eedi2_aa = eedi2_aa_filter(denoised)

nnedi3_aa = nnedi3_aa_filter(denoised)

aa = core.remap.Rfs(denoised, eedi2_aa, mappings="")

aa = core.remap.Rfs(aa, nnedi3_aa, mappings="")

### Debanding

default_deband = default_deband(aa)

light_deband = deband1(aa)

medium_deband = deband2(aa)

debanded = default_deband # will apply filter to the entire clip

debanded = core.remap.Rfs(debanded, light_deband, mappings="")

debanded = core.remap.Rfs(debanded, med_deband, mappings="")

So you created all your base filters and added Rfs calls. Now what? You still have to perform the most tedious part of this entire process—adding frame ranges to those calls. The basic workflow is quite simple:

-

Go to the start of the scene. View the next 2-3 frames. Go to the end of the scene. View the previous 2-3 frames. Based on this, decide on your filtering for the particular scene. If still in doubt, look at other frames in the scene. Sometimes, you will find that different frames in the same scene require different filtering, but this is quite uncommon.

-

Now that you know what filter to use, simply add the frame range to the respective Rfs call. To add a frame range to Rfs, you need to enter it as a string in the

mappingsparameter. The format for the string is[start_frame end_frame]. If you only want to add a single frame, the format isframe_number. An example should help you understand better:# The following replaces frames 30 to 40 (inclusive) and frame 50 # of the base clip with the filtered clip. filtered = core.remap.Rfs(base, filtered, mappings="[30 40] 50") -

Repeat with the next scene.

When scenefiltering, it is good practice to comment out Rfs calls you’re currently not using because they just make your script slower and eat up memory.

This step can take anywhere from a few minutes to hours, depending on the encoder and the source. Most of the time, the same filters can be reused every episode with some minor changes here and there.

Now you might ask, “Why did I have to create base filters for

everything?” The answer is that these base filters allow other filters

to be added on top of them. Let’s say a scene requires light_denoise

but also needs medium_deband on top of that. Just put the same frame

ranges in their Rfs calls and watch it happen. What if a scene requires

denoising stronger than heavier_denoise ? Simple. Add another denoising

filter instead of heavier_denoise like so:

super_heavy_denoise = ultra_mega_super_heavy_denoise(filtered)

filtered = core.remap.Rfs(filtered, super_heavy_denoise, mappings="[x y]")

Using different denoisers on that same frame range is also possible, but always consider the impacts on performance. Calling a strong, slow denoise filter might still be faster (and better-looking) than calling a weak, faster filter multiple times.

Editor shortcuts / tips

If using VSEdit as your editor,

it can be helpful to use the

built-in bookmark functionality

to find the frame ranges of each scene.

There is a small script that can generate

these bookmarks from your clip inside of VSEdit.

If you already have a keyframe file

(WWXD qp-file or Xvid keyframes)

you can instead use the convert function.

# Editing a script called 'example01.vpy'

import ...

from vsbookmark import generate

generate(clip, 'example01')

#convert('keyframes.txt', 'example01')

clip.set_output()

When previewing your clip, there will now be bookmarks generated on the timeline allowing you to skip to the next scene using the GUI buttons.

The python script may be slower than the plug-in due to the way it calls std.Splice to combine multiple re-mappings. The plug-in on the other hand, directly serves the frames of the second clip, with no calls to Splice. The speed difference will likely only be noticeable with a large amount of re-mappings. So, for the average script, it should be unnoticeable.

Masking, Limiting, and Related Functions

There are filters which change the video in various ways, and then there are ways to change the filtering itself. There are likely hundreds of different techniques at your disposal for various situations, using masks to protect details from smoothing filters, blending two clips with different filtering applied, and countless others—many of which haven’t been thought of yet. This article will cover:

- Masking and Merging

- Limiting

- Reference clips

- Expressions and Lookup Tables

- Runtime functions

- Pre-filtering

Masking

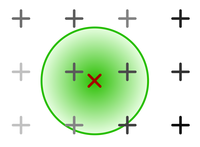

Masking refers to a broad set of techniques used to merge multiple clips. Usually one filtered clip is merged with a source clip according to an overlay mask clip. A mask clip specifies the weight for each individual pixel according to which the two clips are merged; see MaskedMerge for details.

In practice, masks are usually used to protect details, texture, and/or edges from destructive filtering effects like smoothing; this is accomplished by masking the areas to protect, e.g. with an edgemask, and merging the filtered clip with the unfiltered clip according to the mask, such that the masked areas are taken from the unfiltered clip, and the unmasked areas are taken from the filtered clip. In effect, this applies the filtering only to the unmasked areas of the clip, leaving the masked details/edges intact.

Mask clips are usually grayscale,

i.e. they consist of only one plane and thus contain no color information.

In VapourSynth, such clips use the color family GRAY

and one of these formats:

GRAY8 (8 bits integer),

GRAY16 (16 bits integer),

or GRAYS (single precision floating point).

std.MaskedMerge

This is the main function for masking that performs the actual merging. It takes three clips as input: two source clips and one mask clip. The output will be a convex combination of the input clips, where the weights are given by the brightness of the mask clip. The following formula describes these internals for each pixel:

$$

\mathrm{output} = \mathrm{clipa} \times (\mathit{maxvalue} - \mathrm{mask}) + (\mathrm{clip~b} \times \mathrm{mask})

$$

where \(\mathit{max~value}\) is 255 for 8-bit.

In simpler terms: for brighter areas in the mask, the output will come from clip b, and for the dark areas, it’ll come from clip a. Grey areas result in an average of clip a and clip b.

If premultiplied is set to True,

the equation changes as follows:

$$

\mathrm{output} = \mathrm{clipa} \times (\mathit{maxvalue} - \mathrm{mask}) + \mathrm{clip~b}

$$

Manipulating Masks

Building precise masks that cover exactly what you want is often rather tricky. VapourSynth provides basic tools for manipulating masks that can be used to bring them into the desired shape:

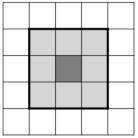

std.Minimum/std.Maximum

The Minimum/Maximum operations replace each pixel with the smallest/biggest value in its 3x3 neighbourhood. The 3x3 neighbourhood of a pixel are the 8 pixels directly adjacent to the pixel in question plus the pixel itself.

Illustration of the 3x3 neighborhood

Illustration of the 3x3 neighborhood

The Minimum/Maximum filters look at the 3x3 neighbourhood of each pixel in the input image and replace the corresponding pixel in the output image with the brightest (Maximum) or darkest (Minimum) pixel in that neighbourhood.

Maximum generally expands/grows a mask because all black pixels adjacent to white edges will be turned white, whereas Minimum generally shrinks the mask because all white pixels bordering on black ones will be turned black.

See the next section for usage examples.

Side note:

In general image processing,

these operations are known as Erosion (Minimum)

and Dilation (Maximum).

Maximum/Minimum actually implement only a specific case

where the structuring element is a 3x3 square.

The built-in morpho plug-in implements the more general case

in the functions morpho.Erode and morpho.Dilate

which allow finer control over the structuring element.

However, these functions are significantly slower than

std.Minimum and std.Maximum.

std.Inflate/std.Deflate

TODO

std.Binarize

Split the luma/chroma values of any clip into one of two values, according to a fixed threshold. For instance, binarize an edgemask to white when edge values are at or above 24, and set values lower to 0:

mask.std.Binarize(24, v0=0, v1=255)

For methods of creating mask clips, there are a few general categories…

Line masks